Algorithms : They are NOT boring!

The man stormed into the store, gripping the coupons as hard as he could -enraged by their presence inside his mailbox. His daughter, while not a child, was still an impressionable teenager.

How could Target, a $28 Billion-dollar general-store-chain company encourage such behavior?

"What is the meaning of this?!", roared the man at the manager, as he placed the coupons on the desk platform with a thump.

"Excuse me, sir?" asked the manager, in utter confusion about what had just happened.

"My family has been receiving maternity and baby care coupons for the past few weeks. Considering how my wife and I have a teenage daughter, I would like a valid explanation as to how why these coupons were sent! Is Target suggesting to my daughter to get pregnant at this age?!", continued the man, his anger not subsiding.

"Not at all, sir. I'm sure this has been a misunderstanding from our side. In fact-", replied the manager.

The manager apologized profusely, and offered the man a coupon book devoid of such products as compensation for the inconvience caused.

The man accepted the coupons on the condition that such coupons would not be sent to his house ever again. The manager agreed without hesitation.

A week later, the same man walked into the store. This time, his body language was quite different.

'Oh no.', thought the manager. Had they mistakenly sent those coupons again? He was definitely in trouble now.

"I'd like to apologize for my behavior last week.", said the man, his tone awash with embarrassment.

"I understand sir. In your situation, I would have done the same.", replied the manager.

"No, you don't understand. Target wasn't sending those coupons by mistake. Apparently, there are some things that have been going in my house that I haven't paid attention to.", replied the man.

( The above is a fictional depiction of a true story that was published in Forbes .)

Target, had figured out that the man's daughter was pregnant by simply observing the products that she was purchasing and then mapping that to products that expecting-mothers acquire - like non-scented soaps, mineral supplements and cotton balls.

How did Target figure this out? Algorithms.

Algorithms aren't some theoritical concept that is restricted to Computer Science classrooms. It does not begin with 'Insertion Sort' and end with 'K-dimensional Trees'. In fact, we are surrounded by algorithms.

From purchase tracking, navigation, and voter analysis to market research, trading and even malicious activities, algorithms cater to every need.

In this post, I'd like to move away from the following definition that is regurgitated in every textbook :

'A finite set of unambiguous instructions that, given some set of initial conditions, can be performed in a prescribed sequence to achieve a certain goal and that has a recognizable set of end conditions.'

Before arguing why I don't agree with it completely, there are some great characteristics that we can derive from this definition.

They are :

Finiteness

An algorithm terminates after a certain number of steps.

Unambiguity

Algorithms shouldn't be open to different interpretations.

Procedural

Algorithms follow a specific procedure to produce a tangable result from initial conditions. These steps are sequential in order.

While these characteristics are often enough for a examination, I feel they paint a dull picture. Algorithms are much more complex than an aggregation of steps.

The idea that algorithms are objective is wrong.

More accurately, the idea that all algorithms are objective is wrong. Think about it - when was the last time that you saw sorting of numbers as the only responsibility of an employee?

Let's take the example of train routes. In a classroom environment, the question would most likely ask you how to ensure smooth transitions of 2-3 trains amongst multiple stations.

But practically, there are a lot of other factors to consider. What if there are mechanical failures? Emergency medical issues? Lack of communication?

I'm sure you have thought of a few more. Algorithms, thus are also "biased", because humans create them. I might give more importance to lack of communication and factor that into my algorithm while you give more weightage to mechanical failures.

Let's look at that Target story again.

What if Target's coupon algorithm didn't factor in unscented soaps and lotions as an indication that the buyer might be pregnant? How would the story change? Would there even be a story?

I hope this at least displays that algorithms don't encompass all cases.

So, we've uncovered the first hidden characteristic of algorithms : natural bias. Let's explore the second one.

Algorithms should be scalable.

What does this mean? Basically, they should function efficiently even if the conditions change from serving one customer, to a thousand, to a million and more.

Now I know what your're thinking. Scalability should be a consideration of programs right?

I agree. However, they should also be a consideration when creating/analysing algorithms. If your algorithm functions at 99.99% efficiency for 100 customers but drops down to 30% when it comes to handling 10,000 - do you think your're going to stay in business for long?

I think understanding scalability, is vital to understanding 'Asymptotic Notation'.

Asymptotic Notation

I won't elaborate a technical definition here - you have enough textbooks doing that. But I'd like to help you develop an intuitive understanding of Asymptotic Notation.

Asymptotic Notation is simply a method of comparing different approaches that can be undertaken to solve a problem.

Imagine a simple cupcake store. For simplicity, assume that they have cupcakes corresponding to the colors in the abbreviation 'VIBGYOR'.

Now, let's say that three customers walk in asking for three different cupcakes. The first one asks, for a violet cupcake. The second, an indigo. The third however, asks for a yellow.

As a human, you flawlessly traverse to where the yellow cupcake is and serve it to the customer. But computers don't think like this. They can't "anticipate" where yellow is because they always traverse in a sequential manner. If a computer did this job, it would go through violet, indigo, blue, green and then yellow all the while comparing at each step.

So what? There's only 7 colors! Traverse all you want!

True. There are only 7 colors. But what if the colors expanded to the millions of colors that the human eye can see? Would you still traverse to the 4,500,234th color and compare the ones before it?

This approach, of traversing the line of colors, is said to be of computational complexity O(n). Computational complexity refers to how much time the approach takes before finding the answer. So here, we have to traverse 'n' colors before finding the cupcake the customer wants.

If the customer wants a cupcake that's in the millions then you can't use this approach. However, what you can do is before opening shop you organize the colors based on their resemblance to the original VIBGYOR colors. Now, you can fetch the appropriate cupcake within 8 comparisons ( 7^8 = 5,764,801 ).

This is known as O(log n) computational complexity, where the base of the logarithm varies accordingly. So, we went from millions of comparisions to less than 6! Now that's efficiency!

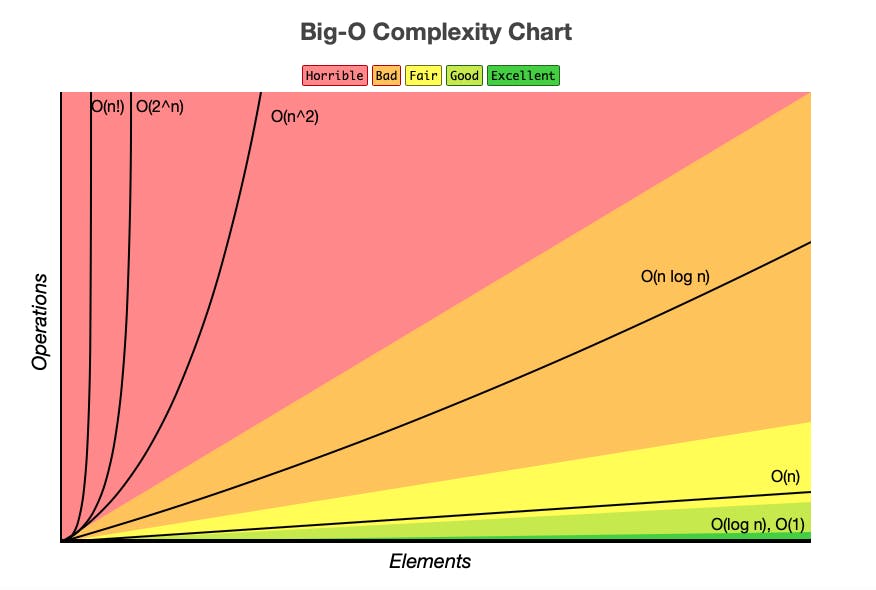

The chart below illustrates the different complexities :

Alright, so we've talked about how easy it is to compute the result. Now, let's see how the storage requirements affect the algorithm.

Technically, memory can be limitless. But realistically, we can store only so much. Which is why algorithms have to consider how much space they require. This is known as 'Space Complexity'.

A good analogy of this would be of browser caches. Whenever you visit Amazon, they store some information regarding the client on the client's machine in the form of cookies (seriously, try visiting Amazon by disabling cookies - you'll get an error message! ). They have to be very careful when deciding what to store while also maximizing the customer's experience on the website.

Imagine if their cookies stored GBs worth of data. They'd be out of business by the time you could say 'Amazon'.

Going back to our cupcake example, I think you'll agree that we can't store all colors in memory (RAM). That would be ridiculously expensive. However, if we bring into memory the subset of colors that the appropriate color belongs to - the approach becomes more economical.

Thus, algorithms should try to balance computational and spacial complexities. With the recent advancements in storage technologies, it is okay to relax a bit when it comes to spacial complexity.

When it comes to industries like "Big Data", it is vital to balance these complexities.

Alright! That covers the characteristics of algorithms. To summarize them :

- Finiteness

- Unambiguity

- Procedural

- Natural Bias

- Scalability

These characteristics are crucial, but they are not enough for classifying algorithms. There are different paradigms when it comes to classification of algorithms, as detailed below :

- Divide & Conquer

- Greedy Approach

- Dynamic Programming

- Backtracking

Linear algorithms can probably be categorized as "Brute-Force", but for simplicity sake I'll classify it under Divide & Conquer ( divide the problem into n subsets and then compute each one individually ).

Divide & Conquer

Here, the algorithm solves the problem by dividing it into 'n' subsets. The strategy that was used to find the 4,500,234th color is an example of this paradigm.

Searching algorithms like binary, ternary and fibonacci search are examples. Dividing into 2, is quite intuitive which is why the 'Divide & Conquer' paradigm is found as early as Babylonia ( 200 BC ).

Quick sort, Merge sort, and Strassen's matrix multiplication are examples of this paradigm.

Historically, this paradigm has not been restricted to algorithms.

Greedy Approach

Greedy Approach is used when a reasonable solution is to be found within a certain timeframe. The traveling salesman problem, wherein a salesman has to go to the residences of multiple people across towns in the least amount of time, is a fine example.

Instead of focusing on collecting information on how to find the global optimal solution ( the most efficient solution ), greedy approach focuses on finding local optimal solutions. This could result in a global optimal solution, a close enough solution or something far from it.

Personally, I find greedy approach to be an algorithmic implementation of a mesh of "common sense" and "just do it"!

For those of you enrolled in a data structures and algorithms course, you might have heard of Prim's and Kruskal's algorithms. These are examples of Greedy Approach.

Dynamic Programming

Dynamic Programming is an algorithm paradigm where we there is a dependency between sub-problems, and thus we consequently store the results of the initial problems to solve the latter problems.

Let's say that you are given two equations :

y = x + 4

z = y + 3

Now, you are asked to compute the value of z for x = 1.

First, you compute the value y for x = 1 i.e. y = 5. Next, you substitute that value in y-z equation to get z = 8.

This act of storing the previous result ( y = 5 ) to get the final result ( z = 8 ) is known as dynamic programming. It is dynamic in the sense that all results are computed at run-time i.e. when the program executes. It is easy to think of it as computing results on-the-go.

As you can imagine from the example above, Fibonacci sequences are an example of dynamic programming.

Backtracking

Backtracking is built on checking conditions. The 8-queens problem in chess is a great example.

Basically, you are given 8 queens which are to be organized across the chess board in such a manner that none of them attack each other.

How would you solve this problem?

You begin by placing one queen at a time, checking that no attacks are possible. If they are, you take back that queen from the particular location and search for a new position.

Backtracking is very helpful in solving constraint satisfaction problems, like crosswords, sudoku and even forms the basis of programming languages like Prolog.

I hope that this has helped you to look at algorithms as an interesting concept rather than something that you simply write about in exams! Feel free to add your own thoughts - I'd love to know more!

If you liked this and want to see similar feeds, you can follow me on Twitter.