How we brought down the response time of Hashnode to < 100ms

One of my goals for 2019 is to speed up Hashnode significantly and make pages load faster. Hashnode is a content-driven website and the majority of our traffic comes from Google. About 30% of the traffic is from mobile devices. So, you can see that speed is as important as the quality of content ... and we want to be really fast. 🔥

So, we decided to improve performance in 5 stages:

- Speed up post pages for signed out users (and Google)

- Speed up home page for signed out users (and Google)

- Improve user feeds -- For logged in users

- Upgrade API responses to speed up client side rendering of posts

- Upgrade various other APIs

Let's see how we did it step by step.

Upgrading Node.js API response time

So, the first step was to do an audit of all the important APIs. In this process, we fixed a few cases where DB indexes weren't set up properly. This part was simple and helped us save a couple of milliseconds.

Since we use a single API for both server side rendering (SSR) and client side rendering (CSR), we were making one extra HTTP roundtrip for every server-rendered request. For example,

export default class Post extends React.Component {

  static async getInitialProps({ req, res, query }) {

    // Assumption: the post id is available on the route query
    const { id } = query;

    const post = await store.postStore.loadPost(id); // This makes an HTTP call to `/ajax/post/:id` endpoint

    return { post };

  }

}

Note: We are using React and Node.js for Hashnode.

The loadPost() function is called every time you access a post page. This is fine for CSR. But in case of server rendering, we incurred the cost of two HTTP calls:

- To fetch the root document

- To fetch the post via an HTTP call (server to server call)

HTTP calls can be expensive since a significant amount of time is spent in various steps such as DNS resolution, the TLS handshake, sending HTTP headers and cookies, and so on. The solution was to prefetch the required post data via a normal DB call and attach the object to the req object, so that when getInitialProps executes, it already has the post.

We did the above for all our feeds, post pages and some other APIs and we reduced latency by 10-20ms right there.
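Here is a minimal sketch of the prefetch idea, assuming an Express-style middleware that runs before server rendering. The names prefetchPost, findPostById, req.prefetchedPost and resolvePost are hypothetical and only illustrate the approach; they are not our actual code.

```javascript
// Hypothetical middleware factory: loads the post straight from the DB
// and attaches it to `req` before server side rendering starts.
function prefetchPost(findPostById) {
  return async function (req, res, next) {
    try {
      req.prefetchedPost = await findPostById(req.params.id);
      next();
    } catch (err) {
      next(err);
    }
  };
}

// What getInitialProps could do: reuse the prefetched object on the server
// and fall back to the HTTP call in the browser, where `req` is undefined.
async function resolvePost({ req, query }, loadPost) {
  if (req && req.prefetchedPost) {
    return req.prefetchedPost; // SSR: no server to server HTTP call
  }
  return loadPost(query.id); // CSR: hits the /ajax/post/:id endpoint
}
```

With something like this in place, the server render only pays for the DB query, while client side navigation keeps using the API as before.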

Reducing TTFB (Time to First Byte)

You can optimize your Node.js APIs, MongoDB queries and even implement an additional layer of caching using Varnish or Redis -- but it's unlikely that your pages will load within 100ms for all users. This is simply because of latency introduced due to geographical distance between your server and users. The only way to beat the latency is to serve content to your users from a Data Centre (DC) that is closest to them. Our servers are in the US. But it's impractical for us to build a geographically distributed website and allow reads from local regions.

The trick is to use a CDN and cache content in local DCs across the globe so that content is delivered to your users with minimal latency. I examined various CDN solutions, but liked Cloudflare the most. It's also super easy to get started with CF. You just need to point your nameservers to CF and it will start caching your static assets. For HTML and JSON caching you need to do a bit more work, but it's fairly straightforward.

So, let's see how we reduced our TTFB with the help of Cloudflare.

Caching post pages

When someone accesses a post anonymously (without signing in), we send the following Cache-Control header in the response:

cache-control: public, s-maxage=2592000, max-age=0

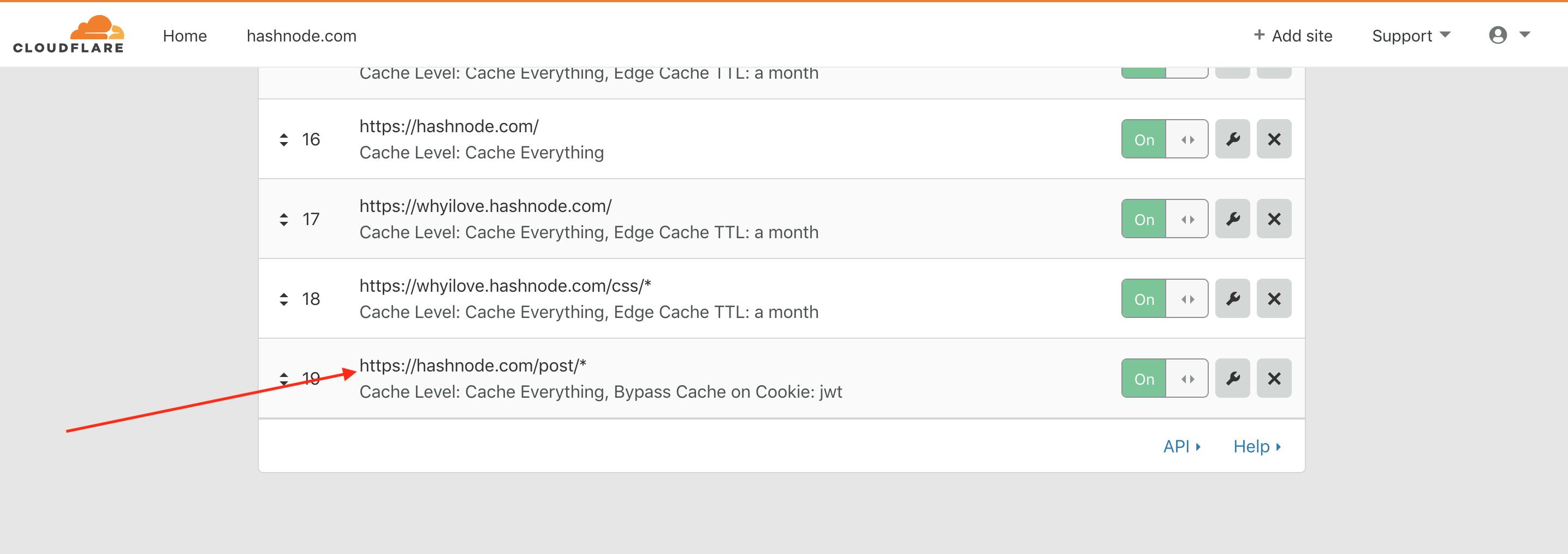

By default, Cloudflare caches only static assets such as CSS, JS etc. It won't cache HTML responses straightaway. You need to add a page rule using Cloudflare's dashboard to instruct it to cache non-static responses. Here is what our page rule looks like:

So, any URL that matches the pattern /post/* is going to be cached by Cloudflare. The TTL of the cache depends on the max-age or s-maxage header sent by the origin server. In this case s-maxage is 2592000 seconds (30 days). So, this means the post pages are going to remain in CF cache for a month. I have also configured the page rule to bypass cache whenever there is a login cookie (jwt) in the request. As a result only logged out users and bots like Google see a cached page.
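To make the header logic concrete, here is a small sketch of how an origin server could pick the Cache-Control value. The function name cacheControlForPost is hypothetical, and the "private, no-store" policy for logged in requests is an assumption for illustration; the anonymous value is the header shown above.

```javascript
const THIRTY_DAYS_IN_SECONDS = 30 * 24 * 60 * 60; // 2592000

// Returns the Cache-Control value for a post page response.
// `cookieHeader` is the raw Cookie request header (may be undefined).
function cacheControlForPost(cookieHeader) {
  const isLoggedIn = /(?:^|;\s*)jwt=/.test(cookieHeader || "");
  if (isLoggedIn) {
    // Assumption: personalized responses should not be stored by shared caches.
    return "private, no-store";
  }
  // Shared caches (the CDN) keep the page for 30 days, browsers revalidate each time.
  return `public, s-maxage=${THIRTY_DAYS_IN_SECONDS}, max-age=0`;
}
```

s-maxage applies only to shared caches such as a CDN, while max-age=0 tells browsers not to reuse their local copy without checking again. That split is what lets us purge a post on the edge and have readers see the update immediately.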

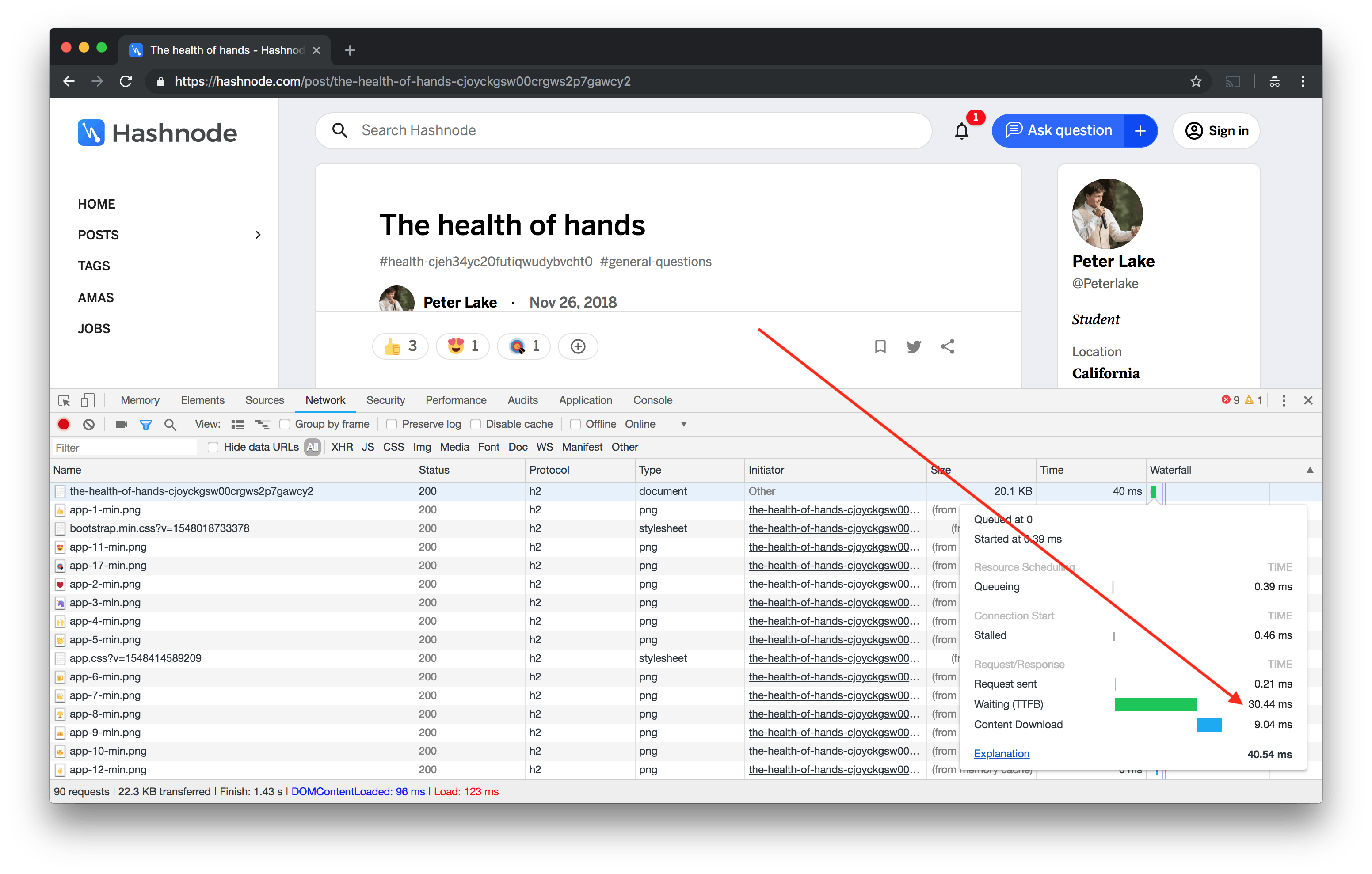

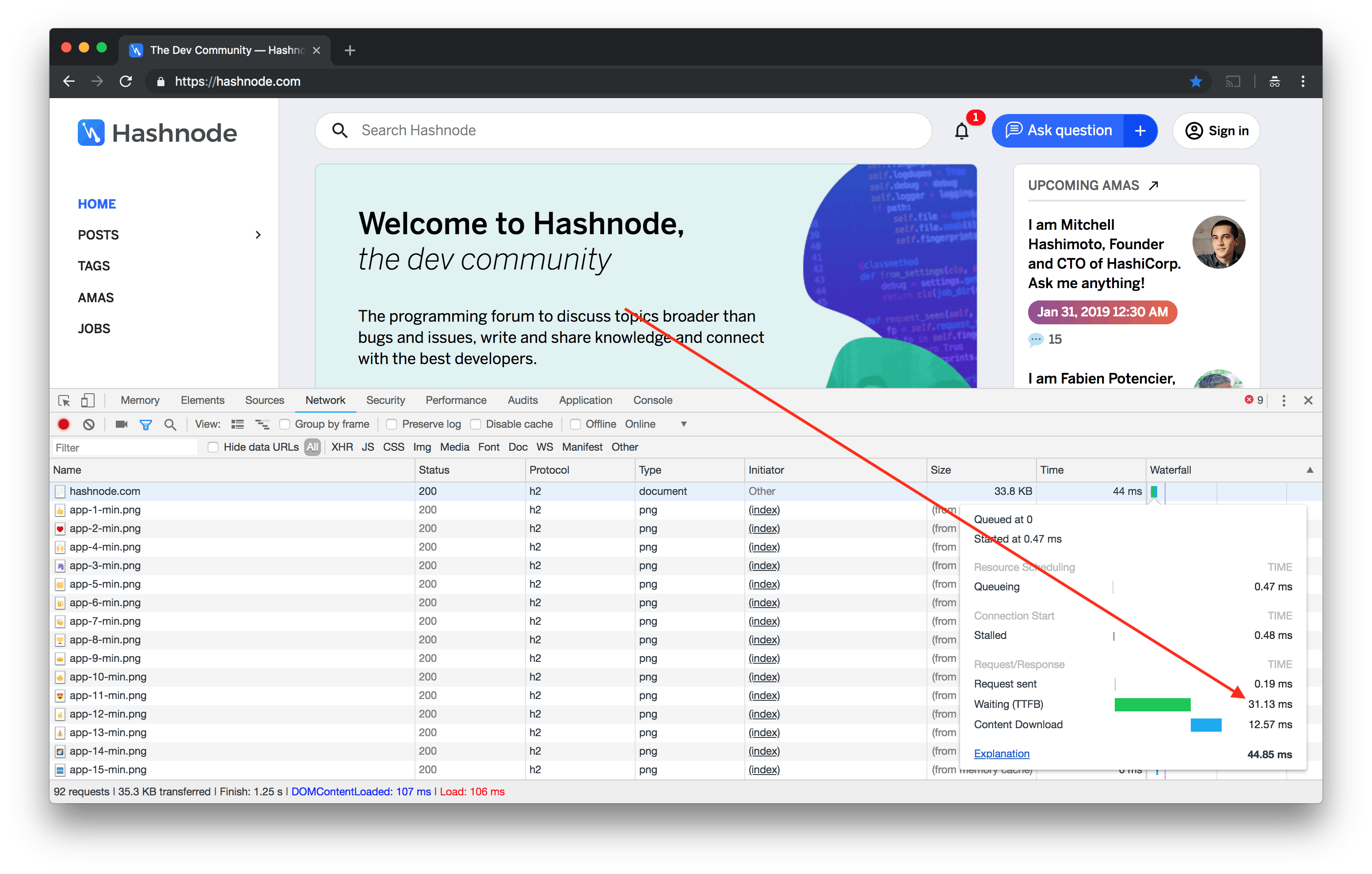

Cloudflare has 150+ DCs across the globe. So, when a reader requests a post page, they see content from a location that is closest to them. This reduces TTFB substantially. For example, here is the TTFB value for a post when accessed from Bangalore:

The TTFB is about 30ms and the root document loads within 40ms. That's insanely fast 🔥.

Protip 💡: Cloudflare won't cache a response when there are cookies in the response headers (unless you specify Edge Cache TTL in page rules). In the beginning I spent days wondering why pages weren't cached on the first visit. After examining closely, I realized that express-session was creating sessions for logged out users as well. So, we had to do a bit of work to make pages cookie-less for anonymous visits.
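One way to keep anonymous responses cookie-less is to skip the session middleware when there is no login cookie. The wrapper below is a sketch under that assumption; sessionForLoggedInOnly is a hypothetical name and this is not necessarily the exact change we made.

```javascript
// Wraps any session middleware (for example the one created by express-session)
// so that it only runs when the request already carries a login cookie.
function sessionForLoggedInOnly(sessionMiddleware) {
  return function (req, res, next) {
    const cookieHeader = req.headers.cookie || "";
    const hasLoginCookie = /(?:^|;\s*)jwt=/.test(cookieHeader);
    if (!hasLoginCookie) {
      return next(); // anonymous visit: no session, so no Set-Cookie header
    }
    return sessionMiddleware(req, res, next);
  };
}
```

Depending on your setup, the saveUninitialized: false option of express-session may be enough on its own, as long as nothing writes to the session for anonymous visitors.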

What about cache purging? We use Cloudflare APIs to purge cached posts when they are updated or receive new activity.
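As a rough sketch, a purge call against Cloudflare's purge_cache endpoint could look like the following. The helper names buildPurgeRequest and purgePost are hypothetical, the zone id and API token are placeholders you would supply, and fetchImpl is injected so the example does not depend on a particular HTTP client.

```javascript
// Builds the request for Cloudflare's "purge files by URL" API call.
function buildPurgeRequest(zoneId, apiToken, urls) {
  return {
    url: `https://api.cloudflare.com/client/v4/zones/${zoneId}/purge_cache`,
    options: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiToken}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify({ files: urls }),
    },
  };
}

// Purges a single post URL; throws if Cloudflare reports a failure.
async function purgePost(fetchImpl, zoneId, apiToken, postUrl) {
  const { url, options } = buildPurgeRequest(zoneId, apiToken, [postUrl]);
  const response = await fetchImpl(url, options);
  const result = await response.json();
  if (!result.success) {
    throw new Error("Cloudflare purge failed: " + JSON.stringify(result.errors));
  }
  return result;
}
```

You would call something like purgePost(fetch, zoneId, token, postUrl) from the code path that saves an edit or records a new response.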

Caching home page & user feed

Logged out users see hot discussions when they visit Hashnode (hashnode.com). So, it makes sense to cache it as well. But it's a bit tricky since logged in users see a personalized feed at the same URL. So, how do you cache the homepage which serves two different kinds of content to users?

Cloudflare has something called Workers that lets you run arbitrary JavaScript on their 150+ Data Centres. So, you can replicate pretty much anything you usually do with a config language like VCL (and even more) with Cloudflare Workers and be as creative as possible with routing.

With the help of workers it's easy to add custom routing and caching strategies to specific URLs. Here is what our code looks like:

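addEventListener('fetch', event => {

  const request = event.request

  // The Cookie header is null when the browser sends no cookies, so default to ""
  const cookie = request.headers.get("cookie") || ""

  // Find Hashnode userId from cookie (this regex is illustrative)
  const match = cookie.match(/(?:^|;\s*)userId=([^;]+)/)

  const userId = match ? match[1] : null

  const isJWTPresent = cookie.indexOf("jwt=") !== -1

  // Logged in, but no userId cookie yet: serve the non-cached page
  if (isJWTPresent && !userId) {

    const url = "https://hashnode.com?noCache=true"

    event.respondWith(fetch(url, request))

    return

  }

  // Anonymous visit: let the request proceed as usual
  if (!userId) { return }

  // Forward to the internal feed endpoint (path separator assumed)
  const url = "https://hashnode.com/u/v1/feed/" + userId

  const newRequest = new Request(url, request)

  event.respondWith(fetch(newRequest, { cf: { cacheEverything: true } }))

})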

So, when you hit hashnode.com the above code executes and does the following:

- Checks whether a login cookie and a custom userId cookie are both set. For users who do fresh login, we set two cookies: jwt and userId. For old users there may not be a userId cookie in the first page load. So, if that's the case the code returns a non-cached version of the page.

- If neither jwt nor userId cookie is set, it means this is an anonymous visit. In that case we simply let the request proceed as usual by using return and our normal Cache-Control headers kick in and cache the content for 6 hours. So, subsequent home page visits result in a cache HIT.

- If both the jwt and userId cookies are set, we forward the request to an internal Hashnode endpoint that takes the userId as a request param and sends the HTML containing the specific user's feed. This is cached for 8 hours and is controlled by cache control headers. Every time the feed is served from cache, we check if the feed has changed, purge the cache and update the content once the page mounts.

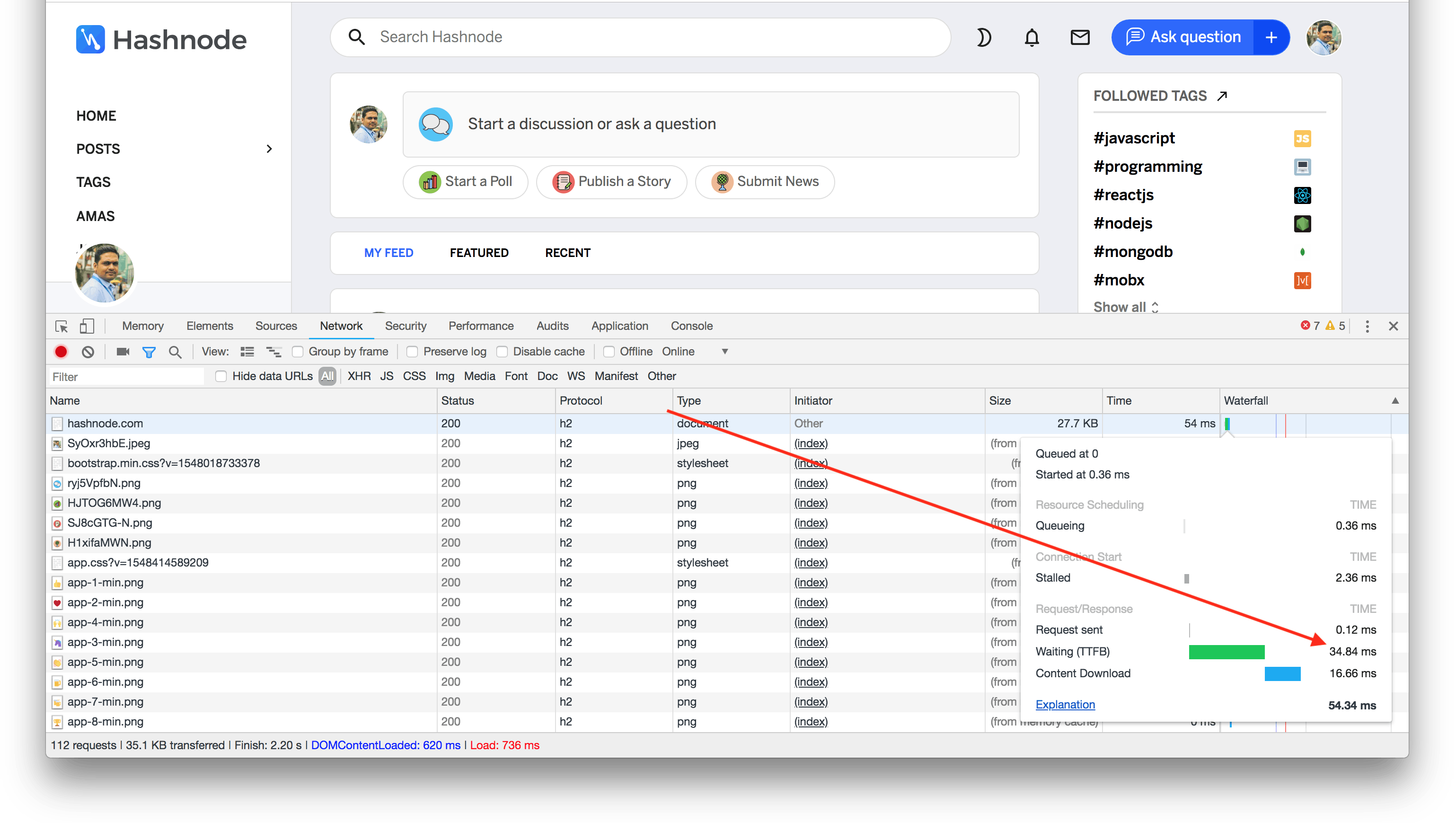

This has a huge impact on TTFB and overall page load time. For a logged in user, the TTFB value is typically 30ms and the page takes about 50ms to load.

For a logged out user, the values are similar.

So, the page load is pretty much instant - almost as if you are on localhost. 😃

We do one more cool trick to make rendering faster. With the help of Addy Osmani's critical, we extract the critical CSS and inline it in the HTML itself. As a result, there is no extra roundtrip to fetch the CSS. Once the page loads, we load the rest of the CSS asynchronously. We are yet to apply this technique to the other pages though.
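The inlining step itself is simple once the critical CSS has been extracted. The sketch below assumes you already have the critical CSS as a string (however it was generated) and shows one common way to inline it and load the full stylesheet without blocking rendering. inlineCriticalCss is a hypothetical helper, not part of the critical package.

```javascript
// Inlines the critical CSS into <head> and loads the full stylesheet asynchronously.
function inlineCriticalCss(html, criticalCss, stylesheetHref) {
  const headAdditions =
    `<style>${criticalCss}</style>` +
    // rel="preload" fetches the file without blocking render; onload applies it.
    `<link rel="preload" href="${stylesheetHref}" as="style" ` +
    `onload="this.onload=null;this.rel='stylesheet'">` +
    // Fallback for visitors with JavaScript disabled.
    `<noscript><link rel="stylesheet" href="${stylesheetHref}"></noscript>`;
  // A replacer function avoids special handling of "$" sequences in the CSS.
  return html.replace("</head>", () => headAdditions + "</head>");
}
```

The browser can paint the above-the-fold content from the inlined styles right away, and the rest of the CSS arrives in the background.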

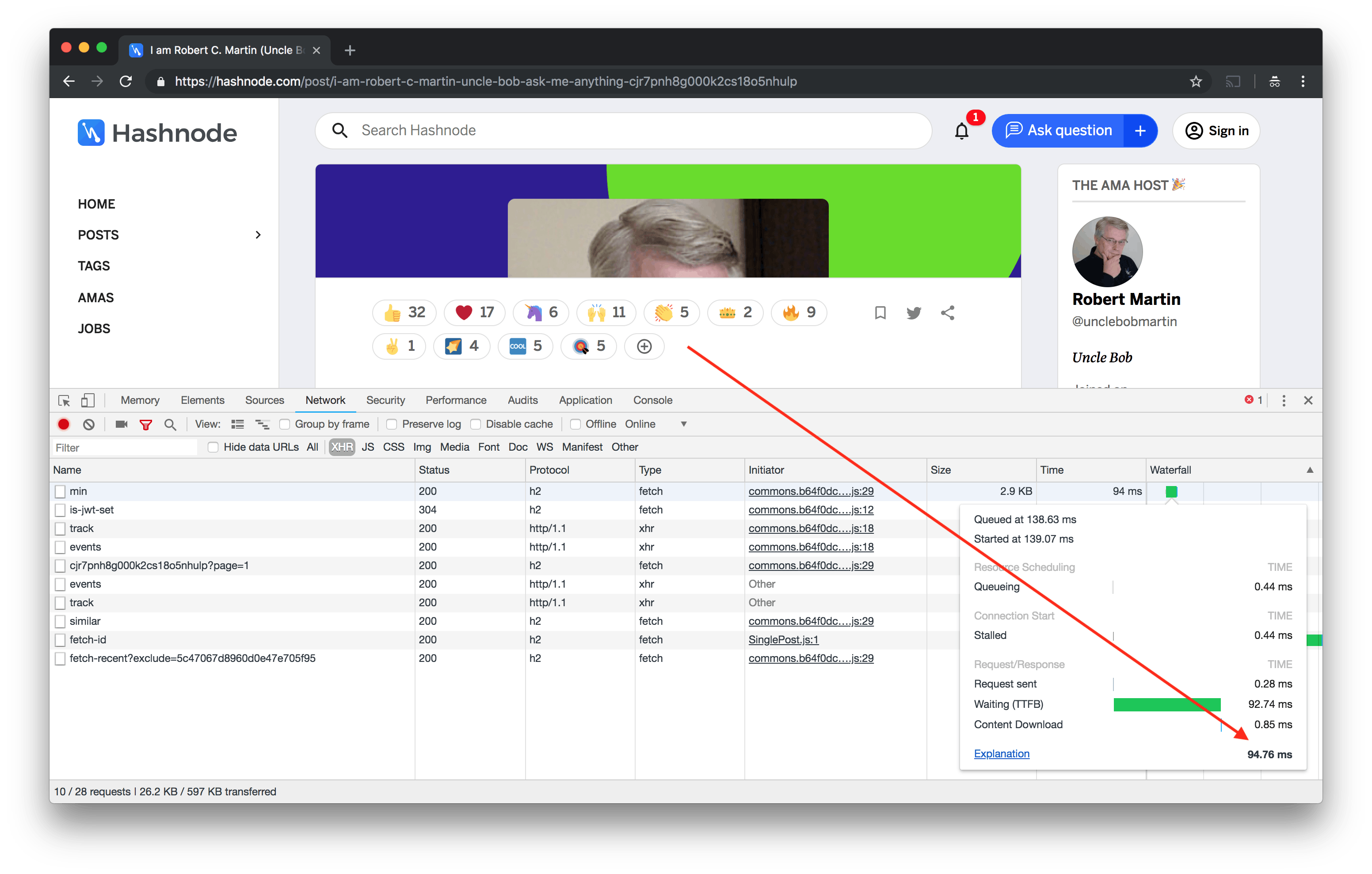

Speeding up frequently accessed posts during soft loading

When you load Hashnode and click on any post, it results in a soft loading (no hard reload). Under the hood, we hit an API to fetch the required post, render the page client side and switch the URL through pushState. To speed this up, we are now doing the following:

- Make an API call to /ajax/post/:id/min and cache the response on Cloudflare for 7 days. As the route's name suggests, we fetch only minimal content in this request such as post title, content, tags etc. If a post is frequently accessed, it will result in a Cache HIT most of the time.

- Switch the URL and render the post page so that users can start reading content.

- Load the full post and replace the old content with the new one. This is to ensure that the values such as upvotes, responseCount, responses etc are up-to-date.
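The three steps above can be sketched roughly like this. softLoadPost is a hypothetical name, and fetchJson, render and switchUrl are injected helpers standing in for the API client, the React render and the pushState call.

```javascript
// Two-phase soft load: show the cached minimal post first, then refresh with full data.
async function softLoadPost(id, fetchJson, render, switchUrl) {
  // 1. Minimal payload, usually a Cache HIT on the CDN
  const minimal = await fetchJson(`/ajax/post/${id}/min`);

  // 2. Switch the URL (e.g. via history.pushState) and render right away
  if (switchUrl) switchUrl();
  render(minimal);

  // 3. Fetch the full post and replace the content so counts are up-to-date
  const full = await fetchJson(`/ajax/post/${id}`);
  render(full);
  return full;
}
```

The reader sees the title and content after the first, cheap request; the second render only fills in the values that change often.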

In addition to the above, when the page loads we prefetch the minimal responses for the first 5 posts during browser idle time. A handy 1KB tool called quicklink helps us do this.
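To illustrate the idea behind idle-time prefetching (this is a hand-rolled sketch, not quicklink's API), the snippet below schedules prefetches with requestIdleCallback when it is available. The helper names and the injected prefetch function are assumptions.

```javascript
// Builds the minimal-response URLs for the first `limit` posts on the page.
function minimalPostUrls(postIds, limit = 5) {
  return postIds.slice(0, limit).map((id) => `/ajax/post/${id}/min`);
}

// Runs `prefetch` for every URL once the browser is idle.
// `scheduler` is optional and mainly useful for testing.
function prefetchWhenIdle(urls, prefetch, scheduler) {
  const schedule =
    scheduler ||
    (typeof requestIdleCallback === "function"
      ? requestIdleCallback
      : (callback) => setTimeout(callback, 0)); // fallback where the API is missing
  schedule(() => {
    urls.forEach((url) => prefetch(url));
  });
}
```

In the browser you might call prefetchWhenIdle(minimalPostUrls(ids), (url) => fetch(url)), so the responses are already in the HTTP cache by the time a reader clicks a post.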

And as a result post loading is instantaneous:

Even in case of posts that are not prefetched (e.g. Hot Discussions), you will not see any noticeable difference in speed. As the API response is cached on Cloudflare, the posts will still render within 100ms.

We have also cached other APIs such as hot discussions, trending stories, AMAs etc with a few hours of TTL. This has dramatically reduced the load on our servers, as around 80% of our content is now served from Cloudflare. We intend to cache various other pages and APIs as we move further.

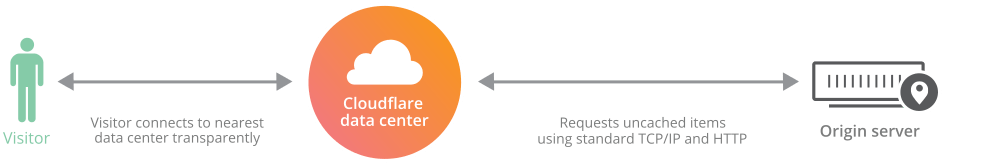

Railgun Optimization

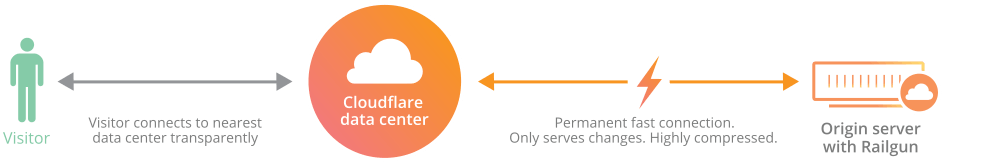

Cloudflare has another interesting feature called Railgun. It further boosts the speed of uncacheable content by compressing the server response and sending only diffs from the origin.

If you look at any feed on Hashnode, you will realize that between two successive requests the change in HTML isn't much. Only a few key areas change depending on the current user and posts. So, instead of sending the whole HTML every time, Railgun sends only a compressed diff to the edge servers, where the HTML is reconstructed and served to the users.

Here is how it looks visually:

It requires installation of memcached and an agent on your end, but other than that it's fairly straightforward. You can read more about Railgun here.

In addition to the above, we have implemented usual best practices such as disk caching of assets, prefetching of bundles and caching them, caching images and other similar resources with higher TTL and so on.

According to PageSpeed Insights, our performance score is at 99. We will get to 100 soon. :) However, we still need to work on the performance on mobile devices.

Cloudflare plays a big role in reducing our latency and improving TTFB. So, feel free to check it out. They have a free tier which is sufficient for most small and medium scale websites. If you need more features, you can always upgrade.

So, what's next?

We are doing the following immediately to boost the performance further:

- Inline CSS for post pages

- Find potential improvements and get to a performance score of 100 on desktop

- Improve score on mobile

- Implement AMP for post pages so that users from mobile devices enjoy an even better experience

Are you seeing the difference in speed on Hashnode? Let me know your thoughts in comments below. 😀