Image and Video Stream Analysis using AWS Rekognition, Serverless Stack and more...

Use case

Amazon Rekognition provides fast and accurate face search, making it possible to identify a person in a photo or video against a repository of face images and/or videos. Image-based identification can replace some of the manual processes currently used to track and identify people, and so improve the overall efficiency of the system. Plenty of use cases fall under AWS Rekognition; typical examples of image/video analysis range from identifying criminals to sex trafficking investigations to customer KYC and many more. See aws.amazon.com/rekognition/customers

In this write-up our aim is to use both the image analysis and the live video stream analysis features of AWS Rekognition to search for and identify a previously registered face.

High Level System Architecture

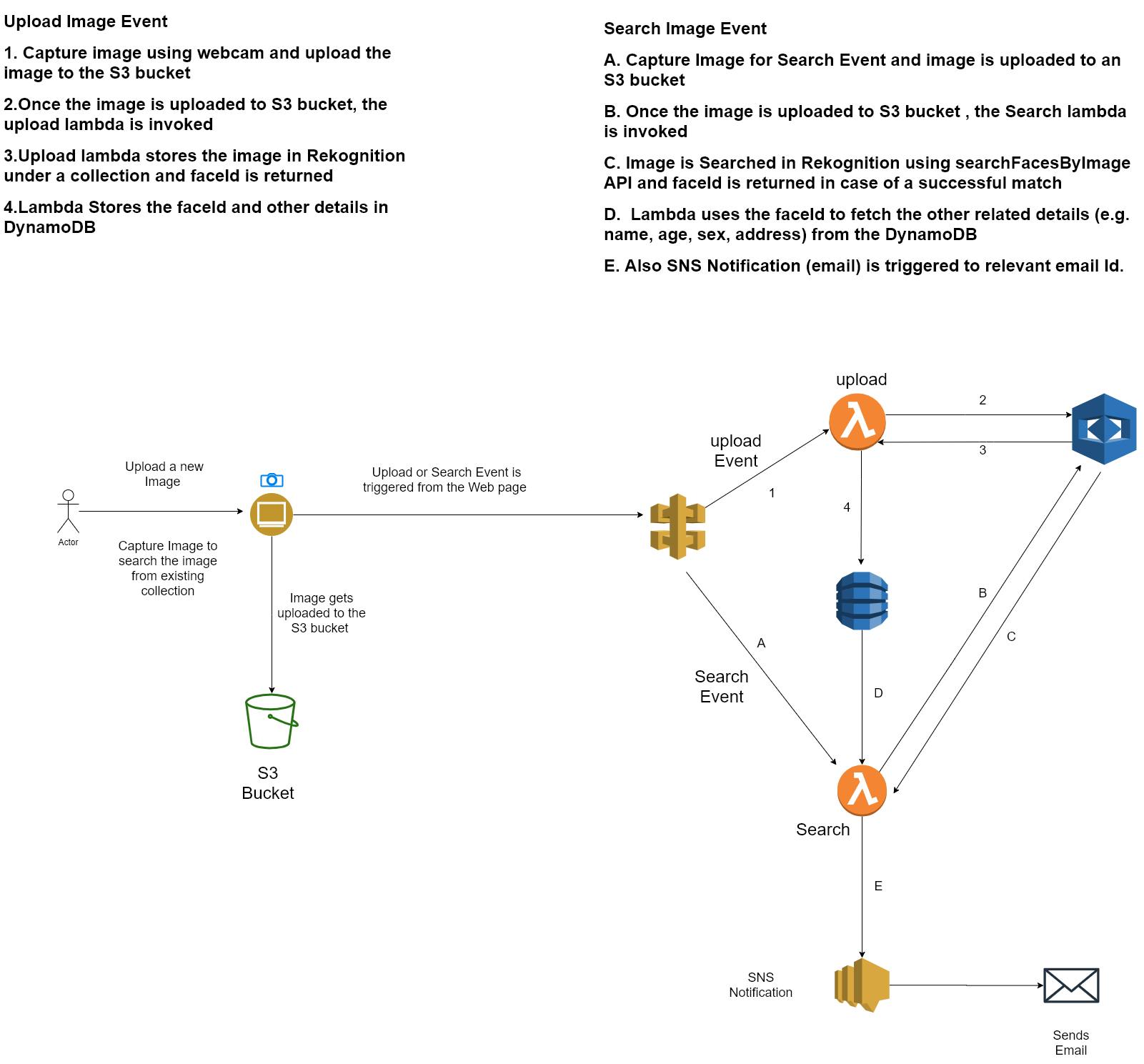

The system architecture is divided into two parts:

- Capturing images using a static web page and consuming Serverless APIs

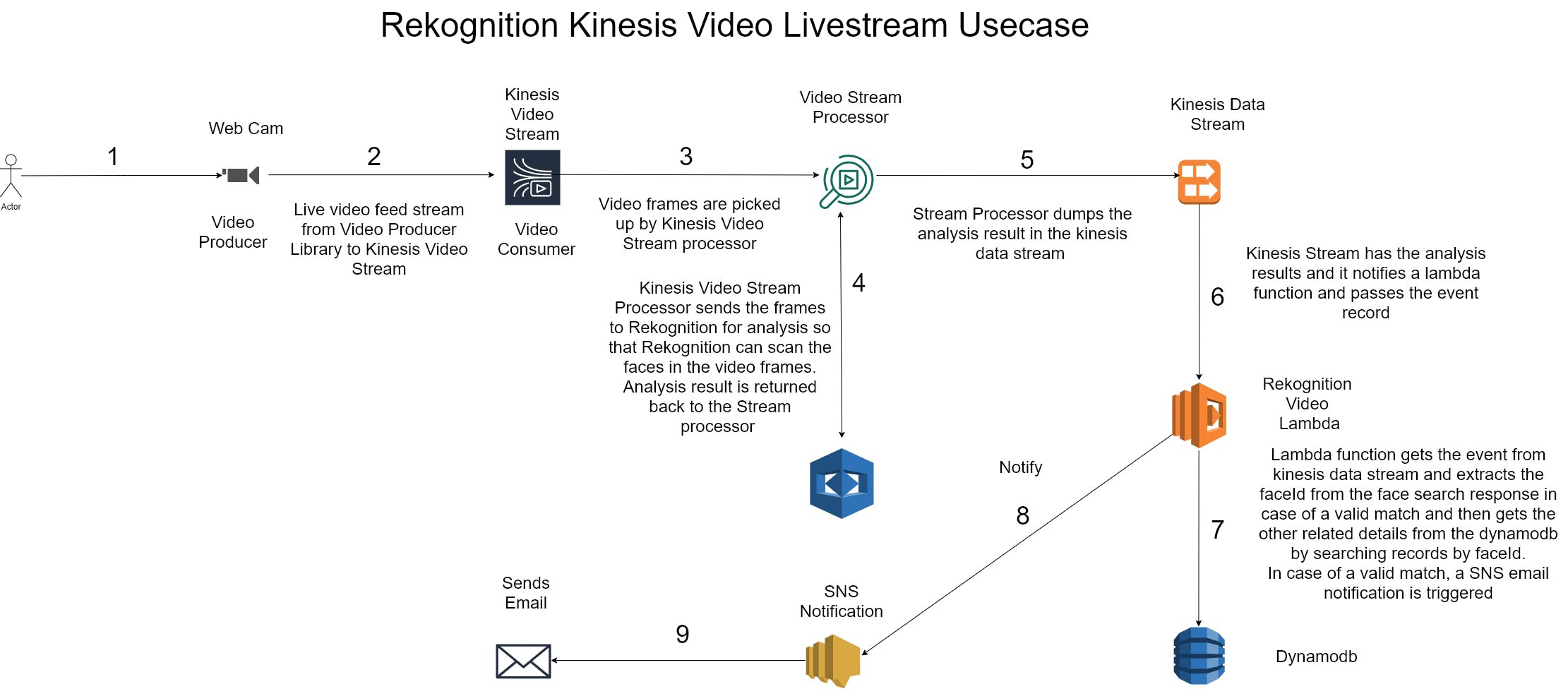

- Using the Kinesis Video Stream Producer Java library to consume the live camera feed from a webcam and push video frames to a Kinesis Video Stream in the backend

AWS Cloud Components

S3 buckets for storing images and offline video, and as the staging area for deploying builds to Lambdas.

Lambdas for compute logic.

API Gateway to expose Serverless APIs.

IAM / Cognito for access control.

AWS Rekognition to create the image index and match faces.

DynamoDB to store additional info about the subject along with their picture or portrait during the initial registration process.

SNS Topic to trigger Email notifications when system detects a face match.

Kinesis Video Stream to ingest the live video feed from CCTV cameras, using the Kinesis Video Stream Producer Java library.

SAM/CloudFormation Templates for Infrastructure as Code.

AWS CodeStar and CodePipeline using Git for CI/CD.

Why serverless

Serverless is the way to go because there is no upfront cost, and the recurring cost of the serverless components is quite low compared to other managed services or to running the application on EC2 instances. Scaling out in the future should not be an issue either: the serverless stack gives us that flexibility, and all we need to care about is paying according to usage/load.

High Level Summary

For our application we are using the following three S3 buckets:

imageupload bucket with public access and proper CORS settings.

lambdazip bucket with public access so that the Lambda zip can be accessed by the CloudFormation worker and deployed during the CI-CD process. This Lambda is invoked once an event from the Kinesis Video Stream has passed through the Video Stream Processor to AWS Rekognition and the final analysis of the video frame has been written to the Kinesis Data Stream.

webhosting bucket with public access for hosting a static website. This website is used to capture images and upload them to the S3 bucket, from where they are ingested by AWS Rekognition for further analysis using the upload and search image Lambdas.
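The "proper CORS settings" on the imageupload bucket are not spelled out in this post, so the following is only a minimal sketch of what they might look like with boto3's `put_bucket_cors`. The helper names, the allowed methods and the origin value are assumptions for illustration, not the configuration used in the project.

```python
def build_cors_configuration(site_origin):
    """Return a CORS configuration that lets one web origin upload objects.

    site_origin is an assumption: the URL of the static site in the
    webhosting bucket (scheme and host, no trailing slash).
    """
    return {
        "CORSRules": [
            {
                "AllowedOrigins": [site_origin],
                "AllowedMethods": ["PUT", "POST"],  # browser uploads only
                "AllowedHeaders": ["*"],
                "ExposeHeaders": ["ETag"],
                "MaxAgeSeconds": 3000,
            }
        ]
    }


def apply_cors(s3_client, bucket, site_origin):
    """Apply the configuration using an S3 client such as boto3.client("s3")."""
    s3_client.put_bucket_cors(
        Bucket=bucket,
        CORSConfiguration=build_cors_configuration(site_origin),
    )
```

Restricting `AllowedOrigins` to the static site's own URL, rather than `*`, is one easy way to tighten the proof-of-concept setup.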

Cognito identity provider for unauthenticated access, so that the web page can capture real images from a webcam using webcam.js and put them in the S3 bucket for further processing by AWS Rekognition.

The web page is hosted in an S3 bucket and exposes two functions: uploading an image to a Rekognition collection, and searching for the face in a provided image. The search runs against the collection created in Rekognition during the upload process.

Once a match is found, an SNS email notification is sent to the registered email ID.
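The upload, search and notify flow described above can be sketched roughly as follows. This is not the code from the repository; it is a minimal illustration using the boto3 calls `index_faces`, `search_faces_by_image` and `publish`. The function names, the use of `ExternalImageId` to carry a person ID, and the 90% threshold are assumptions. The clients are passed in as arguments so the logic can be tried out without AWS access.

```python
def register_face(rekognition, collection_id, bucket, key, person_id):
    """Index the face in s3://bucket/key and return its FaceId, or None."""
    response = rekognition.index_faces(
        CollectionId=collection_id,
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        ExternalImageId=person_id,  # assumed link to the person's DynamoDB record
        MaxFaces=1,
    )
    records = response.get("FaceRecords", [])
    return records[0]["Face"]["FaceId"] if records else None


def find_face(rekognition, collection_id, bucket, key, threshold=90.0):
    """Search the collection for the face in s3://bucket/key."""
    response = rekognition.search_faces_by_image(
        CollectionId=collection_id,
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        FaceMatchThreshold=threshold,  # assumed value, tune for your data
        MaxFaces=1,
    )
    matches = response.get("FaceMatches", [])
    if not matches:
        return None
    best = matches[0]
    return {"face_id": best["Face"]["FaceId"], "similarity": best["Similarity"]}


def notify_match(sns, topic_arn, match):
    """Publish a short email notification for a match returned by find_face."""
    sns.publish(
        TopicArn=topic_arn,
        Subject="Face match found",
        Message=f"Face {match['face_id']} matched with "
                f"{match['similarity']:.1f}% similarity.",
    )
```

In a Lambda these would be called with `boto3.client("rekognition")` and `boto3.client("sns")`. Note that `search_faces_by_image` raises an error when the image contains no face at all, so a real handler would want to catch that case.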

AWS Rekognition as the image identification and analysis service as well as the live webcam feed analyzer.

AWS DynamoDB as the key-value store for person details and the analysis data returned by AWS Rekognition.

AWS IAM roles and policies to grant access to different AWS resources. For the scope of this proof of concept we have given unrestricted access to some of the resources; this can be secured or tightened further.

Lambda as serverless compute.

AWS API Gateway as the edge component which exposes the Lambda APIs.

AWS SAM template for Infrastructure as code (IaC).

AWS Kinesis Video Stream to capture the live video feed, which is pushed using the AWS Kinesis Video Stream Producer Java SDK and consumed by the Rekognition Video Stream Processor.

The AWS Rekognition Stream Processor ingests the live feed from the Kinesis Video Stream and sends it to AWS Rekognition for further processing.

The result of the analysis from the previous step is written to the AWS Kinesis Data Stream.
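The wiring between the Kinesis Video Stream, Rekognition and the Kinesis Data Stream is done by a Rekognition stream processor. As a rough sketch, assuming boto3 and its `create_stream_processor` and `start_stream_processor` calls, it could be set up as below. All names, ARNs and the threshold are placeholders, and the helper functions are hypothetical rather than taken from the repository.

```python
def build_stream_processor_request(name, video_stream_arn, data_stream_arn,
                                   collection_id, role_arn, threshold=85.0):
    """Build the keyword arguments for create_stream_processor."""
    return {
        "Name": name,
        # Live feed pushed by the producer library
        "Input": {"KinesisVideoStream": {"Arn": video_stream_arn}},
        # Where Rekognition writes its face search results
        "Output": {"KinesisDataStream": {"Arn": data_stream_arn}},
        "Settings": {
            "FaceSearch": {
                "CollectionId": collection_id,
                "FaceMatchThreshold": threshold,  # assumed value
            }
        },
        # Role that lets Rekognition read the video stream and write the data stream
        "RoleArn": role_arn,
    }


def create_and_start(rekognition, request):
    """Create the stream processor, then start it."""
    rekognition.create_stream_processor(**request)
    rekognition.start_stream_processor(Name=request["Name"])
```

A stream processor does nothing until it is started, which is why the sketch calls `start_stream_processor` right after creating it.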

The AWS Kinesis Data Stream holds the analysis from AWS Rekognition, and each event is delegated to a Lambda that looks for a match in DynamoDB. If a match is found, an email notification is triggered via SNS.
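To make that last step concrete, here is a minimal sketch of what such a Lambda might do. It relies on two documented shapes: Kinesis delivers each record base64-encoded under `Records[].kinesis.data`, and the stream processor output carries matches under `FaceSearchResponse[].MatchedFaces[]`. The DynamoDB key name `FaceId`, the `Name` attribute and the function names are assumptions, not the project's actual schema.

```python
import base64
import json


def extract_matches(event):
    """Return (face_id, similarity) pairs found in a Kinesis Lambda event."""
    matches = []
    for record in event.get("Records", []):
        payload = json.loads(base64.b64decode(record["kinesis"]["data"]))
        for result in payload.get("FaceSearchResponse", []):
            for matched in result.get("MatchedFaces", []):
                matches.append((matched["Face"]["FaceId"], matched["Similarity"]))
    return matches


def process_event(event, table, sns, topic_arn):
    """Look up each matched face in DynamoDB and email the details via SNS.

    table is a boto3 DynamoDB Table resource, sns a boto3 SNS client.
    Returns the face IDs that triggered a notification.
    """
    notified = []
    for face_id, similarity in extract_matches(event):
        # "FaceId" as the partition key is an assumption for this sketch
        item = table.get_item(Key={"FaceId": face_id}).get("Item")
        if item is None:
            continue  # face is in the collection but has no person record
        sns.publish(
            TopicArn=topic_arn,
            Subject="Face match found in video stream",
            Message=f"{item.get('Name', 'Unknown person')} matched with "
                    f"{similarity:.1f}% similarity (face {face_id}).",
        )
        notified.append(face_id)
    return notified
```

Because the same person usually appears in many consecutive frames, a real handler would probably also need some de-duplication so that one sighting does not produce a flood of emails.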

The Lambda is deployed to an S3 bucket as a zip that includes all its dependencies, using Python virtual env packaging.
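The packaging step is not shown in this post, so the following is only a sketch of the general idea: put the contents of the virtual env's `site-packages` folder at the root of the zip, add the handler file next to them, and upload the result. The function names and paths are hypothetical, and the upload assumes a boto3 S3 client and its `upload_file` method.

```python
import zipfile
from pathlib import Path


def build_lambda_zip(site_packages, handler_file, zip_path):
    """Zip the dependencies and the handler so both sit at the archive root.

    zip_path should live outside site_packages so the archive
    does not end up containing itself.
    """
    site_packages = Path(site_packages)
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(site_packages.rglob("*")):
            if path.is_file() and "__pycache__" not in path.parts:
                archive.write(path, path.relative_to(site_packages).as_posix())
        archive.write(handler_file, Path(handler_file).name)
    return zip_path


def upload_zip(s3_client, zip_path, bucket, key):
    """Upload the zip to the staging bucket (lambdazip in this write-up)."""
    s3_client.upload_file(str(zip_path), bucket, key)
```

Lambda imports modules from the root of the archive, which is why the dependencies are stored relative to `site-packages` rather than under their full path.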

AWS SNS email notification to send the details of the person found in the image to a fixed email address that is subscribed to the SNS topic.

AWS CodeStar for the CI-CD process and deployment.

Source Code

Front-end static HTML :- %[github.com/kunalsumbly/faceIdAppWeb]

AWS Kinesis Video Stream Producer Java SDK :- %[github.com/kunalsumbly/amazon-kinesis-vide…]

Back end Serverless code and SAM template :- %[github.com/kunalsumbly/faceIdAppServerless]

Demo video:- %[youtu.be/1LHmFL7rgbg] The video is crude and raw without any marketing touch, so please be nice :-). I am still learning the tricks of the trade.

Finally, what caught my eye at the recent AWS re:Invent 2020

Container Image support for Lambdas

In my opinion, this feature will standardise the CI-CD process for a lot of enterprises that already use the AWS serverless stack for some of their compute, alongside long-running processes on EC2, ECS or EKS. When it comes to CI-CD, the majority of enterprises use Docker images to deploy code and dependencies across multiple environments; the only exception was probably Lambda deployment, where the code was still deployed as a bundle. This new feature solves that problem. More on this: aws.amazon.com/blogs/aws/new-for-aws-lambd…

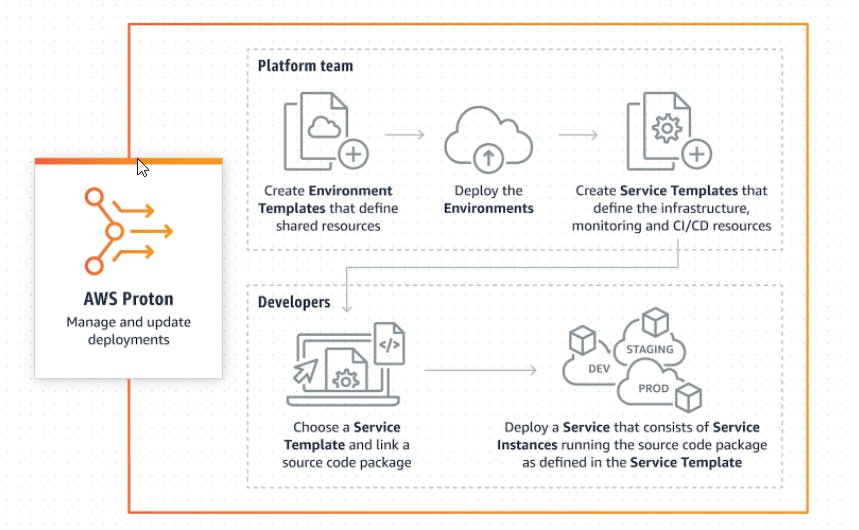

AWS Proton

Infrastructure as Code (IaC) is a powerful concept, but we all know the challenges of managing multiple environments and the configuration drift that creeps in without a streamlined process for managing and deploying infrastructure.

And this is where AWS Proton comes in.

As shown in the diagram above, Proton is an opinionated, 'self-service' CI-CD workflow: a separate workflow for the platform team, which then acts as the input that triggers a separate workflow for the application/dev team.

The idea is to save time by creating common templates which can then be used to provision infrastructure from the same secure building blocks. This is a boon for large enterprises that run hundreds or thousands of applications managed by disparate teams.

AWS Proton :- aws.amazon.com/proton

AWS Proton roadmap:- github.com/aws/aws-proton-public-roadmap/i…

PS:- Special thanks to Rishu and Harry, without their help I could not have done this.