TL;DR: Scaling nodes in a Kubernetes cluster could take several minutes with the default settings. Learn how to size your cluster nodes and proactively create nodes for quicker scaling.

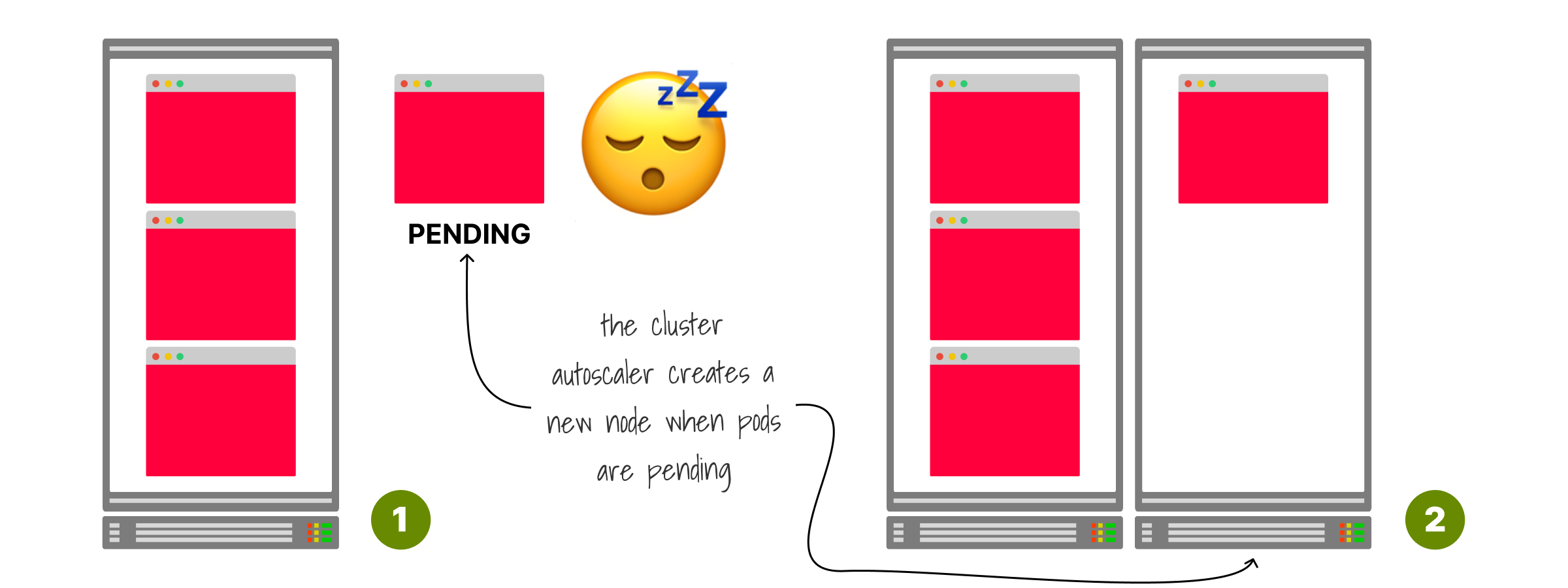

When your Kubernetes cluster runs low on resources, the Cluster Autoscaler provisions a new node and adds it to the cluster.

The cloud provider has to create a virtual machine from scratch, provision it and connect it to the cluster.

The process could easily take more than a few minutes from start to end.

During this time, your app is trying to scale and can easily be overwhelmed with traffic because it isn't able to replicate itself.

How can you fix this?

Let's first review how the Kubernetes Cluster Autoscaler (CA) works.

The autoscaler doesn't scale on memory or CPU.

Instead, it reacts to events and checks for any unschedulable Pods every 10 seconds.

A pod is unschedulable when the scheduler cannot find a node that can accommodate it.
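Here is a minimal sketch of that check in Python, assuming only CPU and memory requests matter. The real scheduler also looks at taints, affinity, and more, and the `Resources`, `Node`, `fits` and `is_unschedulable` names are made up for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Resources:
    cpu_millicores: int
    memory_mib: int


@dataclass
class Node:
    name: str
    allocatable: Resources  # what the node can offer to pods
    requested: Resources    # sum of the requests of pods already on the node


def fits(pod: Resources, node: Node) -> bool:
    """True if the node has enough unrequested CPU and memory for the pod."""
    free_cpu = node.allocatable.cpu_millicores - node.requested.cpu_millicores
    free_mem = node.allocatable.memory_mib - node.requested.memory_mib
    return pod.cpu_millicores <= free_cpu and pod.memory_mib <= free_mem


def is_unschedulable(pod: Resources, nodes: List[Node]) -> bool:
    """A pod is unschedulable when no node can accommodate it."""
    return not any(fits(pod, node) for node in nodes)
```

A pod that is unschedulable according to this check is the kind of pod the cluster autoscaler looks for on each pass.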

However, under heavy load, if the pod is unschedulable, it's already too late.

You can't wait minutes for the node to be created — you need it now!

To work around this issue, you could keep an extra node ready in advance instead of waiting for the autoscaler to react.

Unfortunately, there is no "provision an extra spare node" setting in the cluster autoscaler.

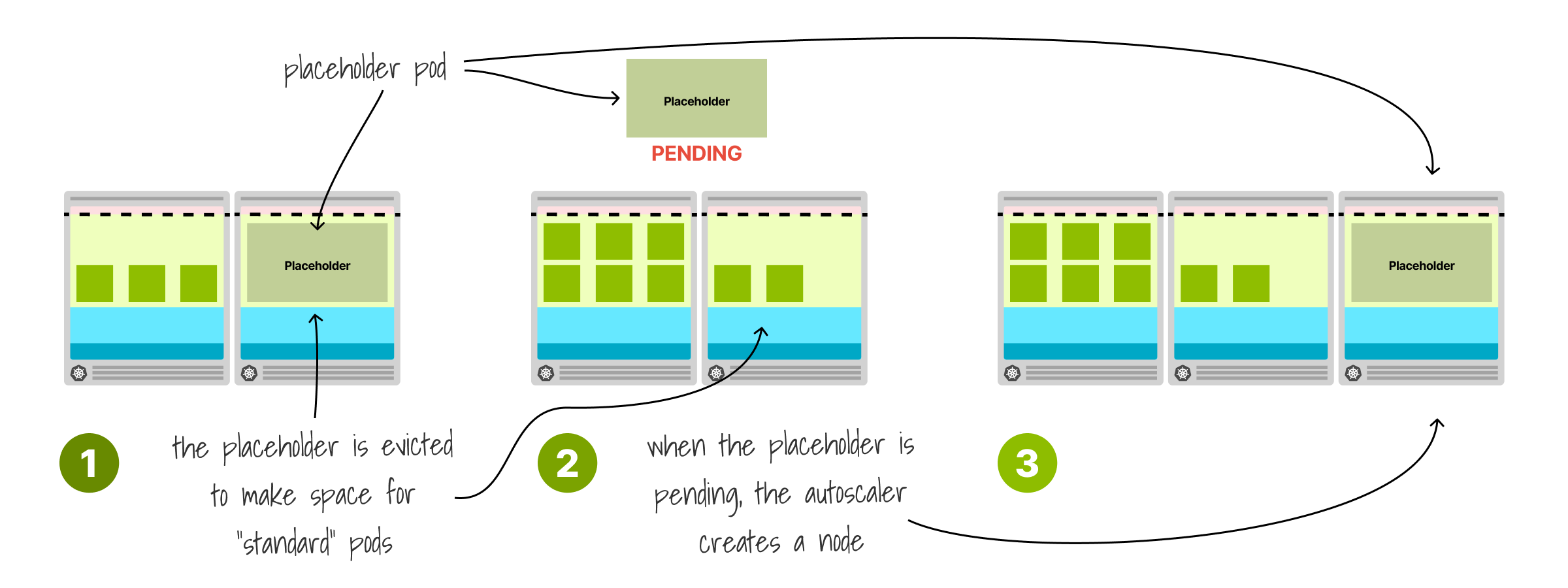

But you can get close with a clever workaround: a big placeholder pod.

It's a pod that does nothing except trigger the autoscaler in advance.

How big should this pod be, exactly?

Kubernetes nodes reserve memory and CPU for the kubelet, the operating system, and the eviction threshold.

If you exclude those from the total node capacity, you obtain the actual resources allocatable for pods.

You should make the placeholder pod use all of them.
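The arithmetic can be sketched in a few lines of Python. All numbers below are illustrative assumptions, not the values of any real instance type; on a real cluster, `kubectl describe node` reports the allocatable figures for you.

```python
def allocatable(capacity: int, os_reserved: int, kubelet_reserved: int,
                eviction_threshold: int = 0) -> int:
    """Resources left for pods once the node's own reservations are removed."""
    result = capacity - os_reserved - kubelet_reserved - eviction_threshold
    if result < 0:
        raise ValueError("reservations exceed node capacity")
    return result


# Hypothetical node: 4 vCPU (4000 millicores) and 8 GiB (8192 MiB) of memory.
placeholder_cpu = allocatable(capacity=4000, os_reserved=100, kubelet_reserved=80)
placeholder_memory = allocatable(capacity=8192, os_reserved=256,
                                 kubelet_reserved=1024, eviction_threshold=100)

print(f"placeholder requests: cpu={placeholder_cpu}m memory={placeholder_memory}Mi")
```

In practice, you may also need to subtract the requests of pods that run on every node, such as DaemonSets, or the placeholder won't fit.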

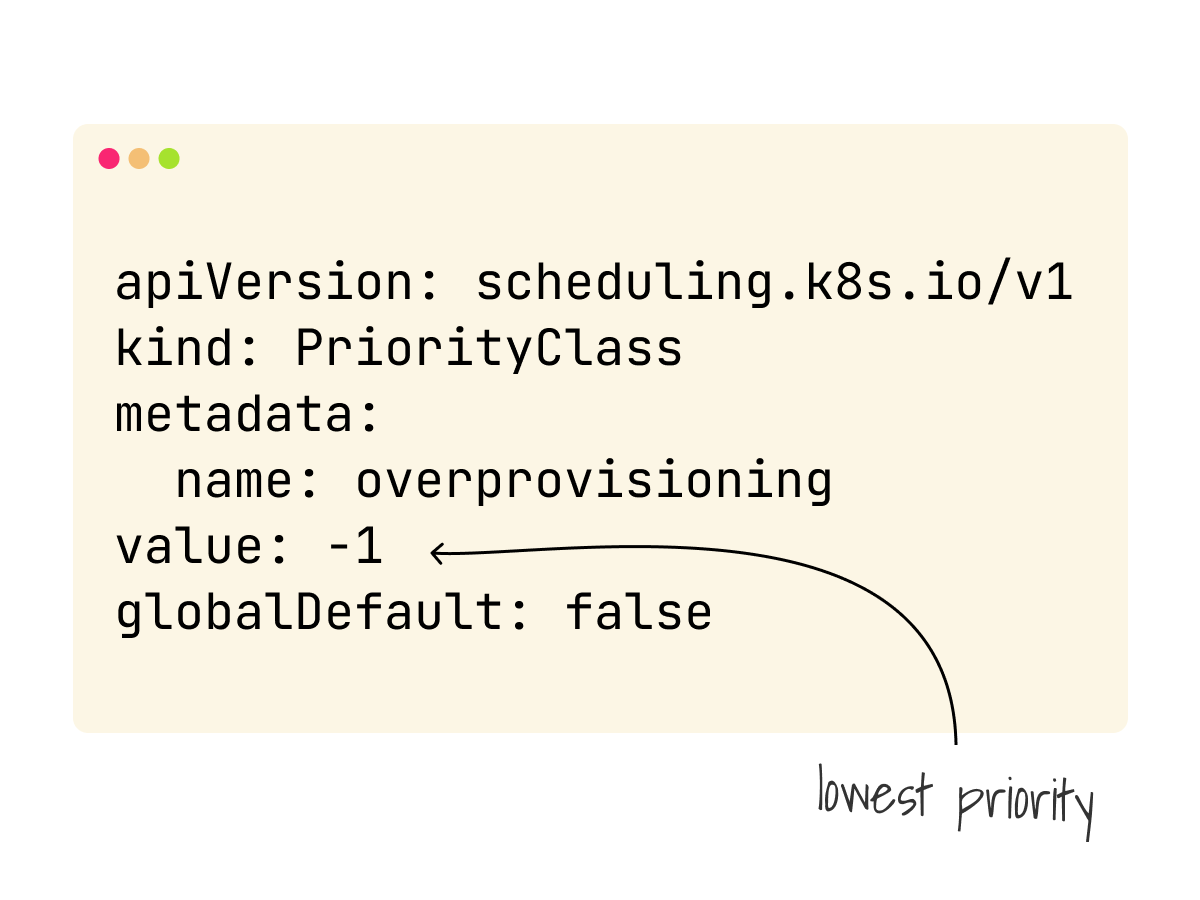

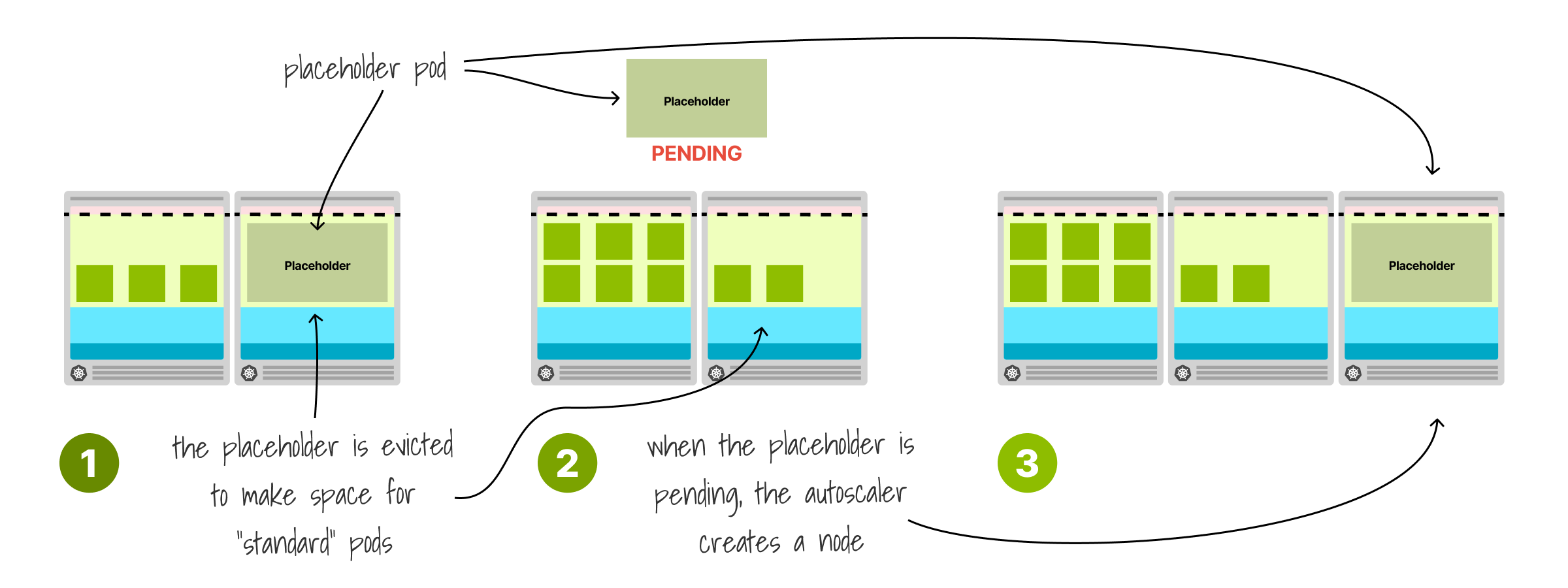

The placeholder pod competes with your workloads for resources, but you can have it removed as soon as you need the space by assigning it a low-priority PriorityClass.

With a priority of -1, all other pods will have precedence, and the placeholder is evicted as soon as the cluster runs out of space.
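As a rough sketch, the two objects could look like the following, generated here as JSON from Python (`kubectl apply -f` accepts JSON as well as YAML). The `overprovisioning` name, the labels, and the request values are assumptions for illustration; the pause image is a common choice for a container that does nothing.

```python
import json


def placeholder_manifests(cpu: str, memory: str, replicas: int = 1) -> dict:
    """Build a PriorityClass with value -1 and a Deployment that uses it."""
    name = "overprovisioning"  # hypothetical name, pick your own
    priority_class = {
        "apiVersion": "scheduling.k8s.io/v1",
        "kind": "PriorityClass",
        "metadata": {"name": name},
        "value": -1,             # lower than the default pod priority of 0
        "globalDefault": False,  # only pods that ask for it get this class
        "description": "Priority class for placeholder pods.",
    }
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": {"run": name}},
            "template": {
                "metadata": {"labels": {"run": name}},
                "spec": {
                    "priorityClassName": name,
                    "containers": [{
                        "name": "pause",
                        "image": "registry.k8s.io/pause:3.9",
                        # Requests sized to fill a node's allocatable resources.
                        "resources": {"requests": {"cpu": cpu, "memory": memory}},
                    }],
                },
            },
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [priority_class, deployment]}


if __name__ == "__main__":
    # Example request values only; use your own node's allocatable resources.
    print(json.dumps(placeholder_manifests("3820m", "6812Mi"), indent=2))
```

Saving the output to a file and applying it with `kubectl apply -f` should create one placeholder pod; increasing `replicas` would keep more than one spare node around.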

What happens when the placeholder is evicted because a pod needs to be deployed?

Since it needs a full node to run, it will stay pending and trigger the cluster autoscaler.

That's excellent news: you are making room for new pods and creating nodes proactively.
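The sequence can be replayed with a toy simulation. This is a simplified model, not how the scheduler or the cluster autoscaler are implemented: it tracks CPU only, evicts every lower-priority pod on a node rather than a minimal set, and adds nodes instantly. All names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Pod:
    name: str
    cpu: int           # requested CPU in millicores
    priority: int = 0  # the placeholder uses -1


@dataclass
class Node:
    name: str
    cpu: int           # allocatable CPU in millicores
    pods: List[Pod] = field(default_factory=list)

    def free(self) -> int:
        return self.cpu - sum(p.cpu for p in self.pods)


def schedule(pod: Pod, nodes: List[Node], pending: List[Pod]) -> Optional[str]:
    """Place the pod, evicting lower-priority pods if that makes room."""
    for node in nodes:  # first choice: a node with enough free CPU
        if pod.cpu <= node.free():
            node.pods.append(pod)
            return node.name
    for node in nodes:  # otherwise: evict lower-priority pods
        victims = [p for p in node.pods if p.priority < pod.priority]
        if victims and pod.cpu <= node.free() + sum(v.cpu for v in victims):
            node.pods = [p for p in node.pods if p not in victims]
            pending.extend(victims)  # evicted pods go back to pending
            node.pods.append(pod)
            return node.name
    pending.append(pod)
    return None


def scale_up(nodes: List[Node], pending: List[Pod], node_cpu: int) -> None:
    """Add a node for each pending pod that fits nowhere, then place it."""
    for pod in list(pending):
        if pod.cpu > node_cpu:
            continue  # would not fit even on an empty node
        target = next((n for n in nodes if pod.cpu <= n.free()), None)
        if target is None:
            target = Node(f"node-{len(nodes) + 1}", node_cpu)
            nodes.append(target)
        target.pods.append(pod)
        pending.remove(pod)


if __name__ == "__main__":
    nodes = [
        Node("node-1", 4000, [Pod("app-1", 2000), Pod("app-2", 2000)]),
        Node("node-2", 4000, [Pod("placeholder", 4000, priority=-1)]),
    ]
    pending: List[Pod] = []
    print("app-3 scheduled on:", schedule(Pod("app-3", 1000), nodes, pending))
    print("pending after eviction:", [p.name for p in pending])
    scale_up(nodes, pending, node_cpu=4000)
    print("nodes after scale-up:", [n.name for n in nodes])
```

In the model, the new app pod lands immediately on the node the placeholder was holding, while the placeholder is the one that waits for the new node.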

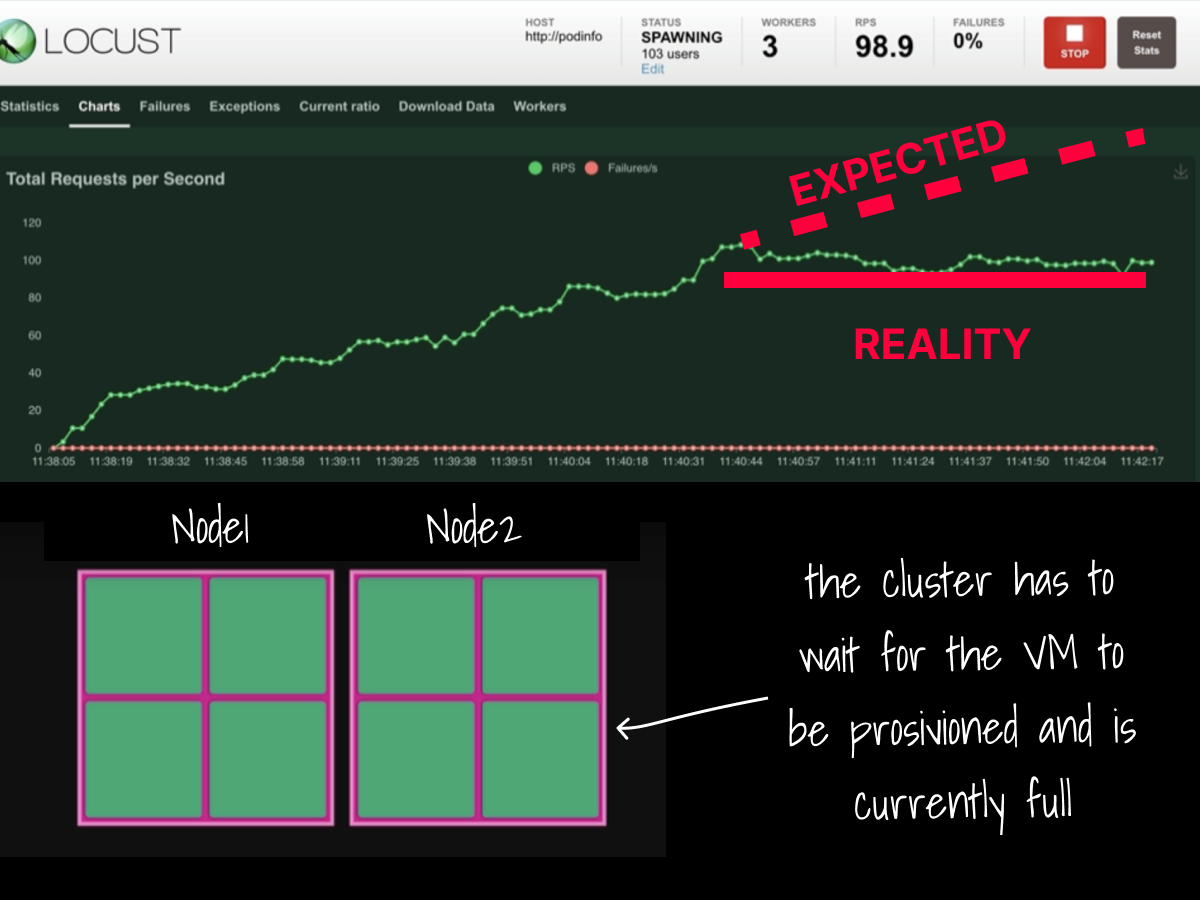

To understand the implication of this change, let's observe what happens when you combine the cluster autoscaler with the horizontal pod autoscaler but don't have the placeholder.

The app cannot keep up with the traffic, and the requests per second flatten.

Compare the same traffic to a cluster with the placeholder pod.

The autoscaler takes half the time to scale to an equal number of replicas.

The requests per second don't flatten but grow with the concurrent users.

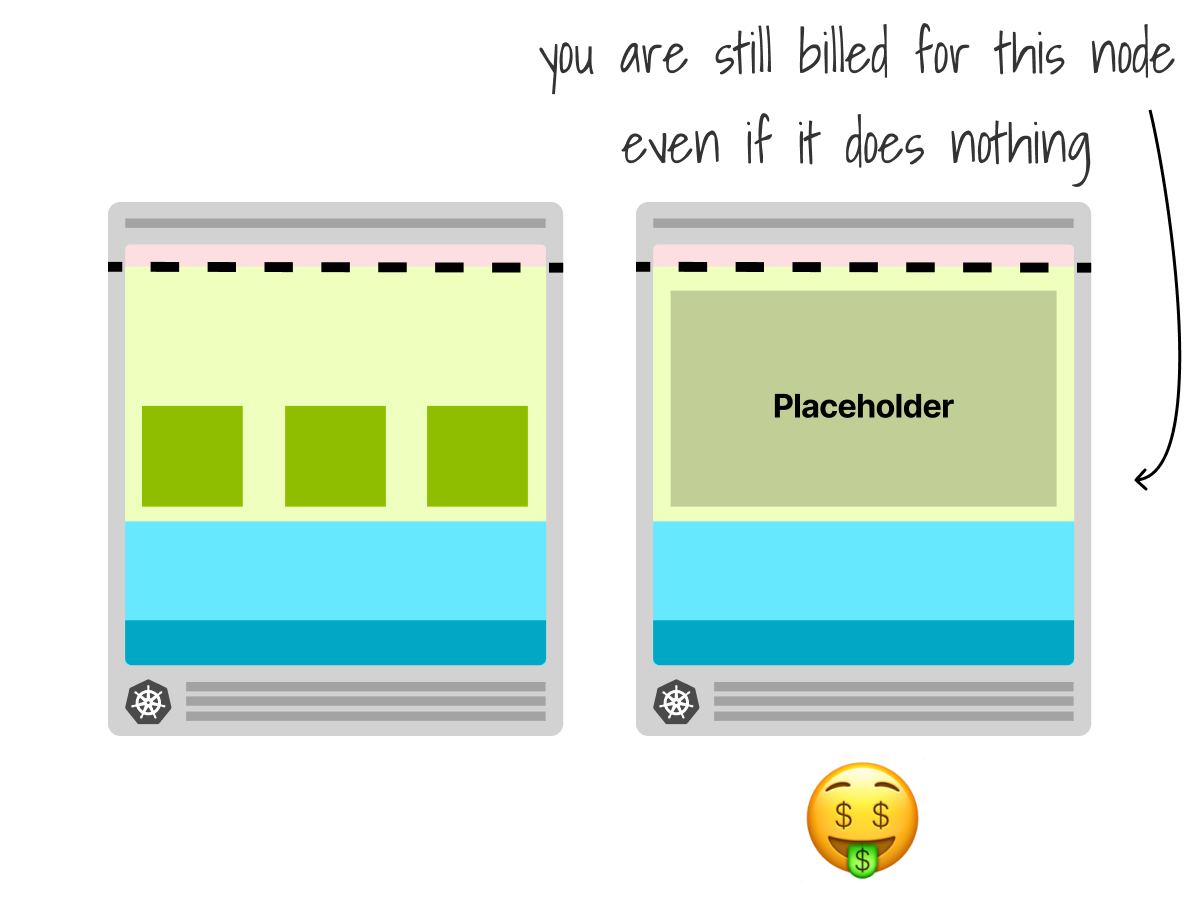

The placeholder makes for a great addition but comes with extra costs.

You need to keep a spare node idle most of the time.

You will still get billed for that compute unit.

And finally, the cluster autoscaler is at its most efficient when you don't need to trigger it!

The size of the node dictates how many pods can be deployed.

If the node is too small, a lot of resources are wasted.

Instead, you could pick an instance size that matches your pods, so that they fill the node completely and little is wasted.
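A back-of-the-envelope version of that calculation, assuming every pod has the same CPU and memory requests. The node and pod sizes are made-up numbers, not real instance types.

```python
from typing import Tuple


def pods_per_node(alloc_cpu: int, alloc_mem: int, pod_cpu: int, pod_mem: int) -> int:
    """How many identical pods fit; the scarcer resource sets the limit."""
    return min(alloc_cpu // pod_cpu, alloc_mem // pod_mem)


def wasted(alloc_cpu: int, alloc_mem: int, pod_cpu: int, pod_mem: int) -> Tuple[int, int]:
    """CPU (millicores) and memory (MiB) left unused once the node is full."""
    count = pods_per_node(alloc_cpu, alloc_mem, pod_cpu, pod_mem)
    return alloc_cpu - count * pod_cpu, alloc_mem - count * pod_mem


if __name__ == "__main__":
    pod_cpu, pod_mem = 1000, 2048  # hypothetical pod: 1 vCPU, 2 GiB
    # Hypothetical allocatable resources for a small and a large node.
    for name, cpu, mem in [("small", 1800, 3000), ("large", 7800, 15000)]:
        count = pods_per_node(cpu, mem, pod_cpu, pod_mem)
        spare_cpu, spare_mem = wasted(cpu, mem, pod_cpu, pod_mem)
        print(f"{name}: {count} pods, {spare_cpu}m CPU and {spare_mem}Mi unused "
              f"({100 * spare_cpu / cpu:.0f}% of CPU)")
```

With these numbers, both nodes leave the same amount of CPU unused, but on the small node it is a much larger share of what you pay for.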

To help with that task, you can try this handy calculator!

learnk8s.io/kubernetes-instance-calculator

If you wish to see this in action, Chris Nesbitt-Smith demos proactive scaling here: event.on24.com/wcc/r/3919835/97132A990504B…

And finally, if you've enjoyed this thread, you might also like the Kubernetes workshops that we run at Learnk8s learnk8s.io/training or this collection of past Twitter threads twitter.com/danielepolencic/status/1298543…

Until next time!