I work with many startups, and sometimes I need access to their EC2 instances on AWS. When I ask, people often send me a private key (a PEM file) to log in with! This distresses me to no end.

Guys, didn't you know that a private key is, ahem, private?

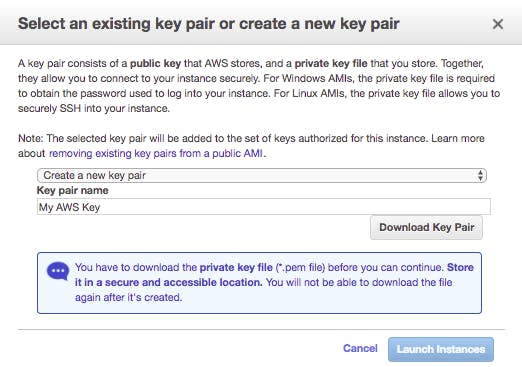

When I thought about it, it occurred to me that one of the biggest culprits is AWS itself, because it offers to create a new SSH key pair while you launch an instance. Most people find this convenient, and since AWS offers the option, they assume it is the right thing to do.

This post is about 8 things that you can and should do to access EC2 instances securely. You only have to do these things once, and then you're all set to launch new EC2 instances in a safe, secure and convenient manner.

It's all about getting over the Potential Barrier, a term that's rooted in Quantum Physics. I like the concept so much because I find it applies to many things in life. It's like going from a plateau to the plains, but there's a hill (the potential barrier) in the middle. You're happiest in the plains, but it's a hard climb to get to the downslope. So you stay in the plateau, unhappy.

If you read and follow the 8 things suggested in this post, you'll find that the climb is not so hard after all, and it's really worth it.

Have one SSH key per person

It's best to have only one key per person, even across different laptops and desktops. When you upgrade your laptop, don't generate a new SSH key; instead, transfer the old one to your new laptop and delete it from the old one.
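Moving the key is just a copy, but permissions matter: OpenSSH refuses to use a private key that other users can read. A minimal sketch, assuming an Ed25519 key named `id_ed25519` (use `id_rsa` if that's what you have) and a hypothetical `install_ssh_key` helper that you run on the new laptop after copying the files over:

```bash
#!/usr/bin/env bash
set -euo pipefail

# install_ssh_key SRC_DIR [DEST_DIR] [KEY_NAME]
# Hypothetical helper: copies a key pair from SRC_DIR (e.g. an encrypted USB
# drive) into DEST_DIR (default ~/.ssh) with the permissions OpenSSH expects.
install_ssh_key() {
  local src="$1" dest="${2:-$HOME/.ssh}" name="${3:-id_ed25519}"
  mkdir -p "$dest"
  chmod 700 "$dest"                                  # only you may list ~/.ssh
  install -m 600 "$src/$name" "$dest/$name"          # private key: owner read/write only
  install -m 644 "$src/$name.pub" "$dest/$name.pub"  # public key may be world-readable
}

# Usage on the new laptop (the source path is only an example):
#   install_ssh_key /media/usb/ssh-backup
# Then, on the old laptop, remove the copies:
#   rm ~/.ssh/id_ed25519 ~/.ssh/id_ed25519.pub
```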

Never, ever, create a shared SSH key for multiple people to use.

What if there are many people in your team who launch EC2 instances? Read on; you'll see how many people can launch instances and give access to them without sharing a key.

Guard your SSH key

With your life, if you can. Seriously. Don't ever share your private key with anyone. Encrypt your hard disk so that only you can use your key.

Many Linux distributions offer disk or home-folder encryption when you install them. Mac users can turn on FileVault in their Privacy & Security settings. If your OS doesn't have such a feature, make sure you give your SSH key a passphrase when you generate it.
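To check whether an existing key already has a passphrase, you can ask `ssh-keygen -y` to derive the public key while supplying an empty passphrase: that only succeeds for an unencrypted key. A sketch, where `key_has_passphrase` is a hypothetical helper and `~/.ssh/id_ed25519` an assumed key path; `ssh-keygen -p` then adds or changes the passphrase in place:

```bash
#!/usr/bin/env bash
set -euo pipefail

# key_has_passphrase KEY_FILE
# Returns 0 if KEY_FILE can't be loaded with an empty passphrase (normally:
# it is encrypted), 1 if it loads without one, 2 if the file isn't readable.
key_has_passphrase() {
  local key="$1"
  [ -r "$key" ] || { echo "cannot read $key" >&2; return 2; }
  # -y prints the public key; -P "" supplies an empty passphrase without prompting.
  if ssh-keygen -y -P "" -f "$key" >/dev/null 2>&1; then
    return 1
  fi
  return 0
}

key="${KEY_FILE:-$HOME/.ssh/id_ed25519}"
if [ -r "$key" ] && ! key_has_passphrase "$key"; then
  echo "$key is not protected by a passphrase."
  echo "Add one (you'll be prompted): ssh-keygen -p -f $key"
fi
```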

All this is so that even if you lose your laptop, no one can get to your private key. Note that having just a login password without disk encryption is not good enough. If someone has physical access to the hard disk, they can mount it on a different computer and read its contents.

I've found that it's not that hard to guard my SSH private key because there is only one key I have to deal with.

Upload your SSH public key to AWS

You can do this using Import key pair under Key Pairs in the EC2 console. You only ever upload the public key (the `.pub` file); the private key stays on your laptop. If multiple people in your team launch instances, import each person's public key. Whoever launches an instance should pick their own key pair at launch time.
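If you prefer the command line, `aws ec2 import-key-pair` does the same thing. A sketch, assuming AWS CLI v2 is configured and a hypothetical `team-keys/` directory holds one `<name>.pub` public key per person; the `import_team_keys` function and its `AWS_CLI` override (handy for a dry run with `echo`) are also made up for illustration:

```bash
#!/usr/bin/env bash
set -euo pipefail

# import_team_keys DIR
# Imports every DIR/<name>.pub into EC2 as a key pair named <name>.
# Only public keys are uploaded; private keys never leave their owners' laptops.
import_team_keys() {
  local dir="$1" aws="${AWS_CLI:-aws}" pub name
  for pub in "$dir"/*.pub; do
    [ -e "$pub" ] || continue          # no .pub files: the glob stays literal
    name="$(basename "$pub" .pub)"     # alice.pub -> alice
    # $aws is left unquoted on purpose, so AWS_CLI can be "echo" or "aws --profile x".
    $aws ec2 import-key-pair \
      --key-name "$name" \
      --public-key-material "fileb://$pub"
  done
}

# Real run:  import_team_keys ./team-keys
# Dry run:   AWS_CLI=echo import_team_keys ./team-keys
```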

If you have a common key generated by AWS some time back, delete it. Now!
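Deleting the key pair in the console (or with `aws ec2 delete-key-pair --key-name <name>`) only removes AWS's copy of the public key. Instances already launched with it still have it in the default user's `~/.ssh/authorized_keys`, so whoever holds the shared private key can still log in to them. A sketch of removing it on each instance, assuming you have the shared key's `.pub` file; `remove_authorized_key` is a hypothetical helper:

```bash
#!/usr/bin/env bash
set -euo pipefail

# remove_authorized_key PUBKEY_FILE [AUTHORIZED_KEYS]
# Deletes every line of AUTHORIZED_KEYS that contains the key from PUBKEY_FILE.
remove_authorized_key() {
  local pub="$1" auth="${2:-$HOME/.ssh/authorized_keys}" blob scratch
  [ -f "$auth" ] || { echo "no such file: $auth" >&2; return 1; }
  # Match on the base64 key material (field 2); the trailing comment may differ.
  blob="$(awk 'NR == 1 { print $2 }' "$pub")"
  [ -n "$blob" ] || { echo "no key found in $pub" >&2; return 1; }
  scratch="$(mktemp)"
  # grep exits 1 when no lines remain, which is fine; 2 is a real error.
  grep -vF -- "$blob" "$auth" > "$scratch" || [ $? -eq 1 ]
  cat "$scratch" > "$auth"   # rewrite in place, keeping the file's owner and mode
  rm -f "$scratch"
}

# On each instance, as the default user (ubuntu, ec2-user, ...):
#   remove_authorized_key shared-key.pub
```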

Sharing a key seems the most convenient thing to do when you start. But think about what'll happen if one person leaves your team. You can't remove their access without removing everyone's access. That can be quite a hassle.

Create individual login IDs

That is, do not use the default user (e.g., ec2-user or ubuntu) to log in. Better still, remove this user once you've made sure the other users can log in. Use only your own ID to log in to any instance, and have everyone use their one and only SSH key to log in.

Having individual user IDs not only leaves a trail of who logged in when and did what, it also keeps the default user's home directory uncluttered, since each user keeps their temporary files in their own home directory.
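That trail lives in the SSH daemon's log. A small sketch that pulls successful key-based logins out of it, assuming Ubuntu's `/var/log/auth.log` (Amazon Linux uses `/var/log/secure`); `ssh_logins` is a hypothetical helper name, and the `sshd-session` variant covers some newer OpenSSH builds that log under that name:

```bash
#!/usr/bin/env bash
set -euo pipefail

# ssh_logins [LOGFILE]  -> prints "user source-ip" for each accepted key login.
# Matches sshd lines such as:
#   ... sshd[1234]: Accepted publickey for alice from 203.0.113.7 port 51234 ssh2: ...
ssh_logins() {
  awk '/sshd(-session)?\[[0-9]+\]: Accepted publickey for / {
    for (i = 1; i <= NF - 3; i++)
      if ($i == "for") { print $(i + 1), $(i + 3); break }
  }' "${1:-/var/log/auth.log}"
}

# Usage (reading the log needs membership in the adm group, or sudo):
#   ssh_logins | sort | uniq -c
```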

If you have many users who need access to the instances, creating users and giving them access manually can become tedious. The trick is to automate it using EC2's User Data feature. The idea is that you write a standard script (or a few of them, depending on your needs) that runs when an instance launches, and supply that script as User Data while launching the instance.

I've cut out some of the middle portions of the screenshot, but you should be able to see that User Data is part of "Advanced Details", which is hidden by default. Click "Advanced Details" to expand it, then click "Choose file" to upload the script that runs on launch.

I've created an example script and shared it in this gist. It's designed for Ubuntu servers, so if you are using Amazon Linux or another distribution, you may need to tweak it a bit. The script does the following:

- Create individual user IDs. You know the list of users and their public keys, so list them in this script.

- Give some of them sudo access, but give everyone read access to all the log files by adding them to groups such as `adm`.

- Add each user's SSH public key to their own `authorized_keys` so they can log in as themselves.
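The gist isn't reproduced here, but a minimal user-data script that does these three things might look like the sketch below. Everything in it is an assumption for illustration: Ubuntu's `useradd`, the `adm` group, the placeholder names and keys in `USERS`, and a per-user file in `/etc/sudoers.d` for the sudo users (the created users have no password, so plain membership in the `sudo` group would leave them stuck at a password prompt). Outside a real launch (not root, or not a cloud-init instance, or `DRY_RUN` set) it only prints what it would do, which makes it easy to review first.

```bash
#!/bin/bash
# Hypothetical EC2 user-data sketch for Ubuntu: one login per person.
# cloud-init runs user-data scripts as root, once, on the instance's first boot.
set -euo pipefail

# name:sudo(yes|no):public key  (placeholder entries: use your team's real keys)
USERS=(
  "alice:yes:ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIExampleAliceKey alice@laptop"
  "bob:no:ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIExampleBobKey bob@laptop"
)

# Change the system only as root on a cloud-init instance (i.e. as real user
# data) and when DRY_RUN is unset; anywhere else, just print the plan.
live() {
  [ "$(id -u)" -eq 0 ] && [ -d /var/lib/cloud/instance ] && [ -z "${DRY_RUN:-}" ]
}

run() {
  if live; then "$@"; else echo "+ $*"; fi
}

# write_file PATH MODE OWNER CONTENT
write_file() {
  if live; then
    printf '%s\n' "$4" > "$1"
    chmod "$2" "$1"
    chown "$3" "$1"
  else
    echo "+ write $1 (mode $2, owner $3)"
  fi
}

add_user() {
  local name="$1" is_sudo="$2" pubkey="$3"
  # Everyone joins adm, which can read most of /var/log on Ubuntu.
  run useradd --create-home --shell /bin/bash --groups adm "$name"
  run install -d -m 700 -o "$name" -g "$name" "/home/$name/.ssh"
  write_file "/home/$name/.ssh/authorized_keys" 600 "$name:$name" "$pubkey"
  if [ "$is_sudo" = yes ]; then
    # No password exists, so grant passwordless sudo explicitly
    # (the same approach cloud-init uses for the default ubuntu user).
    write_file "/etc/sudoers.d/90-$name" 440 root:root "$name ALL=(ALL) NOPASSWD:ALL"
  fi
}

for entry in "${USERS[@]}"; do
  IFS=: read -r name is_sudo pubkey <<< "$entry"
  add_user "$name" "$is_sudo" "$pubkey"
done
```

Running it on your laptop with `bash user-data.sh` prints the planned commands, so you can review them before pasting the script into User Data.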

Another way to automate this is by doing all this manually on one instance and creating an AMI based on that instance. All future launches can now use this custom AMI.

Don't give sudo access to everyone

Give only the amount of access that people need. This is not because some folks are more privileged than others, I know that you trust all your team members :-). Instead, this is more so that people don't do damaging stuff without meaning to.

I have seen people type `sudo rm -rf /`. Really. Not that they wanted to; they meant to type `/something/else`, but hit the Enter key by mistake right after the initial `/`!

To prevent such mishaps, don't give read-only users sudo access. Instead, add them to groups such as `adm` that have read access to whatever they need, typically log files for troubleshooting. This also helps people who do have sudo access, because otherwise they tend to run `sudo su` as soon as they log in (since pretty much everything needs sudo).

Having to use sudo should be rare, and never needed for read access. If someone runs `sudo su` as soon as they log in, revisit your permissions and groups.

Hide non-public instances

Many instances that you launch will very likely not need to be accessed from the internet, except for SSH logins from you and your team. These are typically databases, caches etc., which don't need access from the internet. Let's call them private instances. The best thing to do from a security standpoint is to make these invisible to prying eyes on the internet. There are different ways to do this (simplest to hardest):

- Launch private instances in the Default VPC, and use AWS security groups to block inbound traffic on all ports except from known security groups.

- Launch private instances in the Default VPC without a public IP (the Default VPC's subnets assign one automatically, so turn that off at launch). As long as these instances don't need to reach the internet, this works.

- Create a VPC with public and private subnets, including a NAT so that the private instances can still reach the internet.
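For the first option, the rule that matters is "SSH into the private instances only from the gateway's security group" (the gateway is described just below). A sketch with the AWS CLI; the function name, the `AWS_CLI` dry-run override and the security group IDs are all hypothetical:

```bash
#!/usr/bin/env bash
set -euo pipefail

# allow_ssh_from_gateway PRIVATE_SG GATEWAY_SG
# Adds one inbound rule to PRIVATE_SG: TCP 22, only from instances in GATEWAY_SG.
# A newly created security group allows no inbound traffic, so after this rule
# only the gateway can reach port 22; no CIDR range is opened to the internet.
allow_ssh_from_gateway() {
  local aws="${AWS_CLI:-aws}"   # set AWS_CLI=echo to preview the command
  $aws ec2 authorize-security-group-ingress \
    --group-id "$1" \
    --protocol tcp \
    --port 22 \
    --source-group "$2"
}

# Usage, with placeholder IDs:
#   allow_ssh_from_gateway sg-0123456789abcdef0 sg-0fedcba9876543210
```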

Whichever one you choose, for SSH access you will need one instance that is accessible from the internet and acts as the entry point into your network. Let's call this entry point instance the gateway. To log in to any of the private instances, your team will need to log in to the gateway, and then hop on to the other instances using their private IP addresses.

But what about login IDs and SSH keys?

One option is to have common keys and login IDs for all the private instances. We already saw why it's good to have a different ID for each user, and there's no reason why it cannot apply to private instances. But at first glance, this seems hard to achieve.

Do we generate SSH keys for each user on the gateway? We know that this is not a good practice; everyone should have only one key pair. Should we then copy each user's private key (of their one and only key) to the gateway so that they can hop on from there? That doesn't sound good either: we're supposed to guard our keys carefully, and this exposes the private keys to anyone with sudo access on the gateway.

Use SSH Agent Forwarding

This is where the Agent Forwarding feature of SSH comes in very handy. This feature essentially says:

Make the key from my laptop available at the gateway, but only after I login to the gateway. And only to me.

There is an excellent description of how this works in An Illustrated Guide to SSH Agent Forwarding. To use Agent Forwarding, you will need to do the following:

- `ssh-agent` must be running on your laptop. This is automatic on Linux and Mac, but on Windows you'll need to start it manually.

- Make your key available to the agent by adding it with `ssh-add <key file>`, where `<key file>` is usually `~/.ssh/id_rsa` (or `~/.ssh/id_ed25519` for newer keys).

- Use the `-A` command-line option when starting SSH, for example: `ssh -A gateway.hashnode.com`.
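The first two steps can be wrapped in a small shell function that you call before connecting: it starts an agent only if none is reachable, and adds your key only if the agent doesn't already hold it. A sketch; `ensure_agent_key` is a hypothetical name and `~/.ssh/id_ed25519` an assumed key path. Source it into your shell (for example from `~/.bashrc`) rather than running it as a separate script, because the agent's environment variables must end up in your own shell:

```bash
# ensure_agent_key [KEY_FILE]
# Hypothetical helper: make sure an agent is running and holds KEY_FILE.
ensure_agent_key() {
  local key="${1:-$HOME/.ssh/id_ed25519}" fp rc=0
  ssh-add -l >/dev/null 2>&1 || rc=$?
  # ssh-add -l exits 2 when it cannot reach an agent at all (1 = agent has no keys).
  if [ "$rc" -eq 2 ]; then
    eval "$(ssh-agent -s)" >/dev/null   # exports SSH_AUTH_SOCK and SSH_AGENT_PID
  fi
  # Fingerprint (e.g. SHA256:...) of the key, compared with what the agent holds.
  fp="$(ssh-keygen -lf "$key.pub" | awk '{print $2}')"
  [ -n "$fp" ] || { echo "cannot read $key.pub" >&2; return 1; }
  if ! ssh-add -l 2>/dev/null | grep -qF -- "$fp"; then
    ssh-add "$key"   # prompts for the passphrase if the key has one
  fi
}

# Usage:
#   ensure_agent_key && ssh -A gateway.hashnode.com
```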

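If you find yourself forgetting the `-A` too often, you can set up your SSH config so that agent forwarding is turned on automatically, either for all hosts or only for the specific hosts where you want it. Specific hosts are the safer choice: while you're connected, anyone with root access on a host you forward to can use your agent. My SSH config (`~/.ssh/config`) looks like this, for example: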

```
# Use "Host *" instead to enable this for all hosts
Host gateway.hashnode.com
    ForwardAgent yes
```

Agent Forwarding works well with GitHub too, which means that once you log in to the gateway, you can access your repos as yourself using your one and only SSH key. But the gateway is typically just a hop; you'll usually need GitHub access from another server that is one more hop away.

To make Agent Forwarding work across such hops, all you need to do is enable it (either via the SSH config, or using `-A`) on each of the intermediate hops. For instance, to enable git access from a server inside the VPC, set `ForwardAgent yes` in your SSH config file on the gateway.
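On the gateway, that could be a `~/.ssh/config` in your own account like the fragment below. The `10.0.*` pattern is an assumption that your VPC uses a `10.0.0.0/16` range, as in the `10.0.1.141` example later in this post; adjust it to your own CIDR, or list hosts individually:

```
# ~/.ssh/config on the gateway (in your own account)
Host 10.0.*
    ForwardAgent yes
```

From a private instance, `ssh -T git@github.com` is a quick check: GitHub greets you by your own username when your forwarded key reaches it.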

Note that on a Mac, keys added with `ssh-add` are forgotten after a reboot, so you'll have to add your key again after every reboot. Alternatively, you can use the Keychain, as described at the end of GitHub's Using SSH agent forwarding guide.
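On macOS, the Keychain approach comes down to a one-time `ssh-add --apple-use-keychain ~/.ssh/id_ed25519` (older macOS versions use `-K` instead) plus a few lines in `~/.ssh/config`. `UseKeychain` is an Apple-specific option, so this fragment belongs only in the config on your Mac; the key path is an assumption:

```
Host *
    AddKeysToAgent yes
    UseKeychain yes
    IdentityFile ~/.ssh/id_ed25519
```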

Use SSH Port Forwarding

Many people are used to GUI-based tools such as phpMyAdmin and MySQL Workbench for accessing servers. But if those servers are private instances within the VPC, their IP addresses aren't exposed to the internet (or access is blocked), so how do you use these tools?

The Port Forwarding feature of SSH comes to the rescue in this case. Let's say we want to access a MySQL server at 10.0.1.141, which is a private instance. This is how you would start ssh from your laptop:

```
$ ssh -L3000:10.0.1.141:3306 gateway.hashnode.com
```

The above -L command line option of SSH essentially says this:

Forward all network traffic on localhost port 3000 to 10.0.1.141, port 3306.

Now, if you open up MySQL Workbench and connect to localhost or 127.0.0.1 port 3000, you'll be shown 10.0.1.141's database contents!

I picked port 3000 as the local port because I have my own MySQL running on my laptop on port 3306, so using 3306 as the local port for forwarding would conflict with it. You can pick any port that's convenient for you, as long as it isn't already in use.
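If you open this tunnel often, a small wrapper can check that the local port is free before starting it. A sketch under these assumptions: `open_db_tunnel` is a hypothetical name, the database listens on MySQL's default port 3306, and `SSH_CMD` is an override added only so you can preview the command with `echo`. The free-port probe uses bash's `/dev/tcp` redirection:

```bash
#!/usr/bin/env bash
set -euo pipefail

# open_db_tunnel LOCAL_PORT DB_HOST GATEWAY
open_db_tunnel() {
  local local_port="$1" db_host="$2" gateway="$3"
  local ssh="${SSH_CMD:-ssh}"
  # If a connection to the local port succeeds, something is already listening there.
  if (exec 3<>"/dev/tcp/127.0.0.1/$local_port") 2>/dev/null; then
    echo "local port $local_port is already in use; pick another" >&2
    return 1
  fi
  # -f: background once authenticated; -N: forward only, no remote shell;
  # ExitOnForwardFailure: fail loudly instead of backgrounding a broken tunnel.
  $ssh -f -N -o ExitOnForwardFailure=yes -L "$local_port:$db_host:3306" "$gateway"
}

# Usage:
#   open_db_tunnel 3000 10.0.1.141 gateway.hashnode.com
#   mysql -h 127.0.0.1 -P 3000 -u <db-user> -p
```

With the `mysql` command-line client, use `127.0.0.1` rather than `localhost`: the client treats `localhost` as a Unix-socket connection and ignores the port.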

Conclusion

It's not at all hard to follow the best practices in setting up users and SSH access to EC2 instances. Most people don't follow these only because they don't know what's possible, and also feel that it's hard. I hope this post proves helpful to you and going forward, you'll stop sharing SSH keys and login IDs.

I have dealt with only AWS EC2 here, but the concepts are similar, if not the same, for other cloud providers such as Azure and DigitalOcean. You'll find that you can apply these ideas (with some tweaking) to those as well.

Also, PuTTY on Windows supports both Agent Forwarding and Port Forwarding. I haven't explained how to set them up there, but you should be able to figure it out yourself, or a little searching on the internet should guide you.

So, go ahead, climb that potential barrier and secure your EC2 instances!